Chatbot users often recommend treating a series of prompts like a conversation, but how does the chatbot know what you are referring back to? A recent study sheds light on the mechanism that transformer models, such as those driving modern chatbots, use to decide what to pay attention to. The research was conducted by Samet Oymak, an assistant professor of electrical and computer engineering at the University of Michigan. The study, which will be presented at the Conference on Neural Information Processing Systems (NeurIPS), mathematically demonstrates how transformers learn to identify key topics and aggregate relevant information within lengthy texts.

Transformer architectures, first proposed in 2017, have reshaped natural language processing because they are so good at consuming large amounts of text. Models such as GPT-4 can handle even whole books. Unlike their predecessors, transformers break text into smaller pieces, called tokens, and process them in parallel while still retaining the context around each word. The GPT large language model spent years digesting internet text before it became the chatbot sensation it is today.

The key to transformers is their attention mechanism, which decides which information is most relevant. Oymak’s research team found that transformers rely on an old-school technique to do this: the support vector machine (SVM), a concept invented 30 years ago. SVMs are commonly used for tasks like sentiment analysis of customer reviews, where they draw a boundary that separates the data into positive and negative categories. Similarly, transformers use an SVM-like mechanism to decide what to pay attention to and what to ignore.
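To make the boundary idea concrete, here is a minimal sketch of a linear SVM trained on a toy sentiment task. It is an illustration only, not the method from the study: the two features per review (counts of positive and negative words), the six data points, and the function names `train_linear_svm` and `predict` are all assumptions made up for this example, and the training loop is a plain subgradient descent on the regularized hinge loss.

```python
def train_linear_svm(points, labels, lr=0.1, reg=0.01, epochs=200):
    """Fit a linear boundary w.x + b = 0 by subgradient descent on the hinge loss."""
    w = [0.0] * len(points[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(points, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            # L2 regularization: shrink the weights slightly on every step.
            w = [wi * (1 - lr * reg) for wi in w]
            if margin < 1:
                # The point is inside the margin or misclassified: push the boundary away.
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
    return w, b


def predict(w, b, x):
    """Return +1 (positive review) or -1 (negative review)."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score >= 0 else -1


# Hypothetical features: (positive-word count, negative-word count) per review.
reviews = [(4, 0), (3, 1), (5, 1), (0, 4), (1, 3), (1, 5)]
labels = [1, 1, 1, -1, -1, -1]

w, b = train_linear_svm(reviews, labels)
for x, y in zip(reviews, labels):
    print(x, "label", y, "predicted", predict(w, b, x))
```

Only the reviews closest to the boundary end up shaping it, which is the sense in which an SVM separates what matters from what can be ignored.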

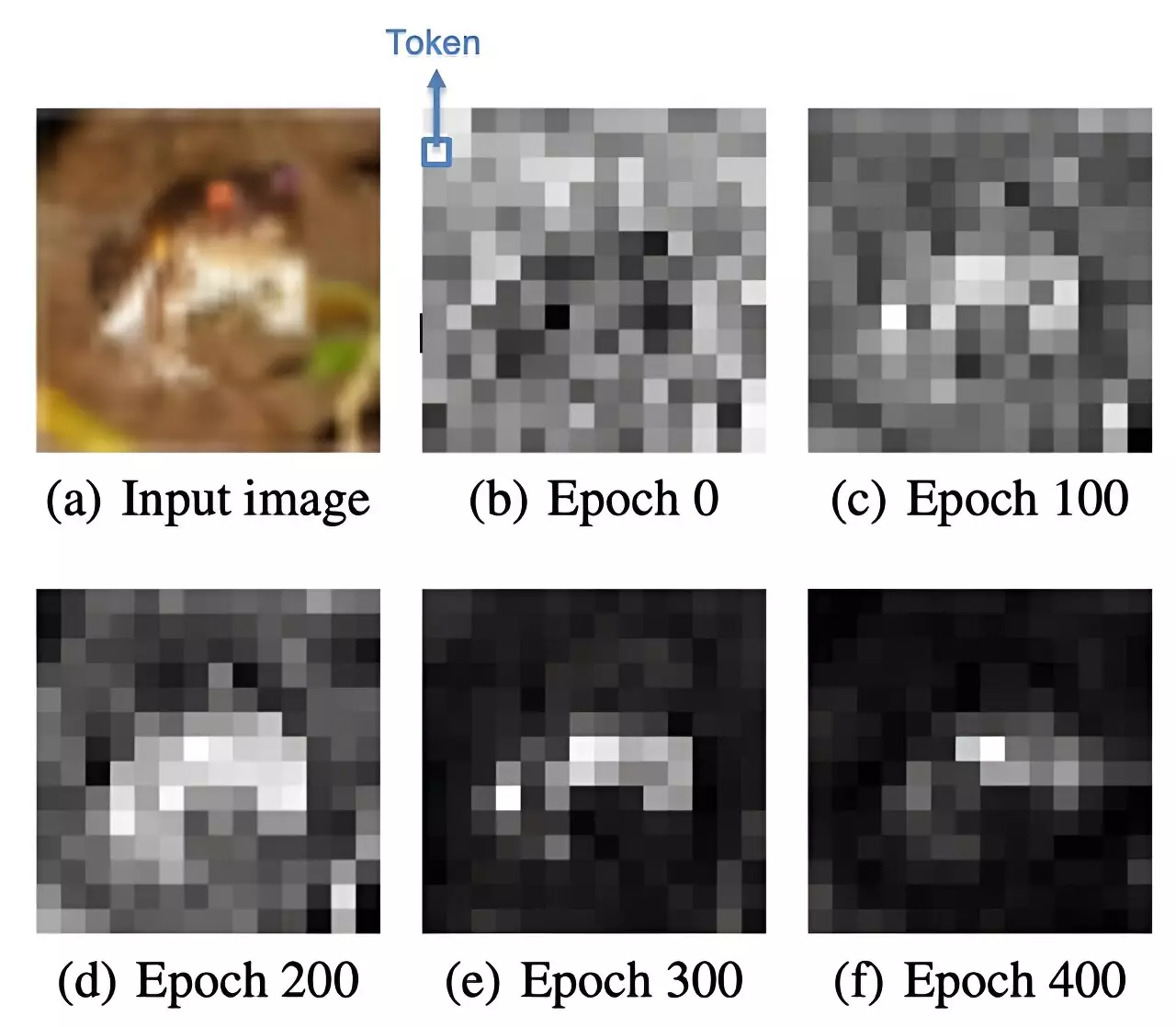

Despite the conversational experience that chatbots like ChatGPT provide, they rely on multidimensional mathematics. Each token of text is converted into a vector, which is essentially a string of numbers. When you enter a prompt, ChatGPT uses its attention mechanism to assign a weight to each vector and then decides which words and combinations of words to consider when formulating a response. It predicts the next word based on the words that came before, and repeats this until the response is complete. Even when a conversation feels like a continuation, ChatGPT revisits the entire conversation and assigns new weights to each token before generating its reply. This is how it gives the impression of recalling earlier statements, and it is what lets it summarize interactions effectively.
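The weighting step can be sketched in a few lines. The example below is a simplified illustration under stated assumptions, not ChatGPT's actual implementation: the three-dimensional token vectors and the query vector are invented, and the token vectors stand in directly for keys and values, whereas a real transformer first passes them through learned projections. The scoring rule is the standard scaled dot product followed by a softmax.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Score each key against the query (scaled dot product), then normalize."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


def attend(query, keys, values):
    """Return the weighted average of the value vectors."""
    weights = attention_weights(query, keys)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


# Hypothetical token vectors, made up for illustration.
tokens = ["the", "cat", "sat"]
token_vectors = [
    [0.1, 0.0, 0.1],
    [1.0, 0.2, 0.0],
    [0.0, 1.0, 0.3],
]
query = [1.0, 0.1, 0.0]  # a query that points in roughly the same direction as "cat"

weights = attention_weights(query, token_vectors)
context = attend(query, token_vectors, token_vectors)
for token, weight in zip(tokens, weights):
    print(f"{token}: {weight:.3f}")
```

Here "cat" receives the largest weight because its vector lines up best with the query. Every token still receives some nonzero weight, which is why softmax attention has no hard cutoff between relevant and irrelevant tokens.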

One limitation of transformer architectures is that they lack an explicit threshold for deciding what information to prioritize. This is where the SVM-like mechanism comes in, bringing a degree of interpretability to the process. Black box models such as transformers have become popular despite being poorly understood, and Oymak’s study offers a clearer picture of how the attention mechanism can pinpoint and retrieve relevant information from a vast body of text. Oymak emphasizes that this kind of research is crucial as black box models become more widespread.

The findings of this study have significant implications for the future development of large language models. By understanding how transformers allocate attention and retrieve information, researchers can work towards making these models more efficient and interpretable. Furthermore, Oymak anticipates that this knowledge will be invaluable for other domains that rely on attention, such as perception, image processing, and audio processing. The team’s future plans include presenting a second paper that delves deeper into the topic. Titled “Transformers as Support Vector Machines,” this paper will be presented at the Mathematics of Modern Machine Learning workshop at NeurIPS 2023.

The study by Samet Oymak and his team advances our understanding of transformer models. By connecting the attention mechanism to support vector machines, it points toward more efficient and interpretable natural language processing models. As reliance on AI grows across many fields, the work sheds light on the inner workings of chatbots and may inform advances in other areas of artificial intelligence.
