Creating versatile humanoid robots has always been a challenge in the field of robotics. Traditional control systems have proven to be inflexible, designed for specific tasks and unable to handle the unpredictability of real-world terrains and visual conditions. This rigidity limits the robots’ utility, confining them to controlled environments. However, there has been a growing interest in learning-based methods for robotic control, which can dynamically adapt their behavior based on data from simulations or direct interaction with the environment.

Researchers at the University of California, Berkeley, have developed a breakthrough control system for humanoid robots that addresses these challenges of flexibility and adaptability. Inspired by the deep learning architectures behind large language models, the system uses a history of recent observations and actions to predict future states and actions.

The control system, trained entirely in simulation, demonstrates robust performance in unpredictable real-world settings. By analyzing its past interactions, the AI dynamically refines its behavior to effectively tackle novel scenarios that it has never encountered before. This innovative control system opens up new possibilities for humanoid robots as valuable assistants capable of navigating the world and assisting in various physical and cognitive tasks.

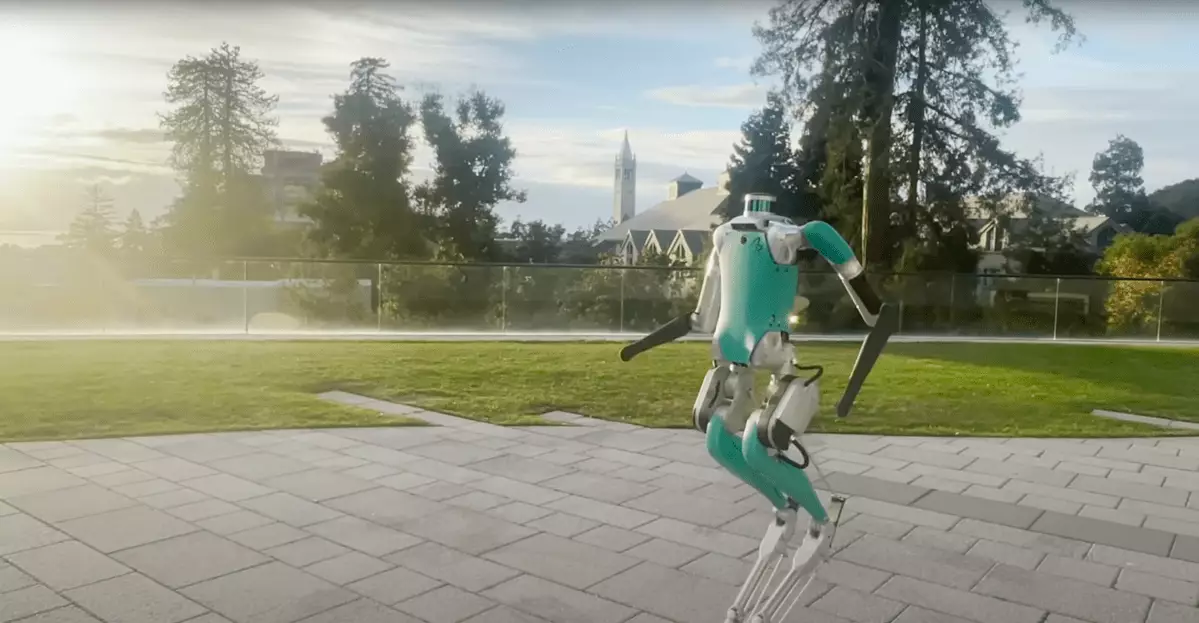

The control system’s adaptability extends to various terrains and environments. Tested on Digit, a full-sized humanoid robot, the system showcases remarkable outdoor walking capabilities. It navigates reliably across everyday human environments such as walkways, sidewalks, running tracks, and open fields. It can traverse different surfaces like concrete, rubber, and grass without falling.

The robot also exhibits resilience to disturbances and unexpected obstacles. It can successfully handle unexpected steps, random objects in its path, and even objects thrown at it. Furthermore, it maintains its pose and stability when pushed or pulled, showcasing its robustness in the face of disruptions.

One of the most intriguing aspects of this new control system is the process of training and deploying the AI model. The control model underwent extensive training in simulation on thousands of domains and tens of billions of scenarios using a high-performance GPU-based physics simulation environment called Isaac Gym. The simulated experience was then seamlessly transferred to the real world without the need for further fine-tuning, through a process known as sim-to-real transfer.
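The article does not spell out how the "thousands of domains" differ from one another. A common approach in sim-to-real work is domain randomization, where each simulated environment draws its physics parameters at random so the policy cannot overfit to a single simulator configuration. The sketch below illustrates that idea only; the parameter names and ranges are hypothetical and are not taken from the Berkeley system or from Isaac Gym.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class SimDomain:
    """One randomized simulation domain (all fields are hypothetical)."""
    ground_friction: float   # friction coefficient of the terrain
    payload_mass_kg: float   # extra mass attached to the torso
    motor_strength: float    # multiplier applied to commanded torque
    sensor_noise_std: float  # std. dev. of noise added to observations


def sample_domain(rng: random.Random) -> SimDomain:
    """Draw physics parameters from illustrative, made-up ranges."""
    return SimDomain(
        ground_friction=rng.uniform(0.3, 1.2),
        payload_mass_kg=rng.uniform(0.0, 5.0),
        motor_strength=rng.uniform(0.8, 1.2),
        sensor_noise_std=rng.uniform(0.0, 0.05),
    )


def sample_domains(n: int, seed: int = 0) -> List[SimDomain]:
    """Sample n independent domains, reproducibly for a given seed."""
    rng = random.Random(seed)
    return [sample_domain(rng) for _ in range(n)]
```

Calling `sample_domains(4096)` would yield 4,096 differently parameterized environments. A policy trained across all of them has to cope with the whole range, which is one reason zero-shot transfer to real hardware can work.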

During real-world operation, the system demonstrated emergent abilities, handling complex scenarios such as navigating steps that were not explicitly covered during training. The heart of the system is a “causal transformer,” a deep learning model that processes the history of proprioceptive observations (readings from the robot’s own body sensors) and actions. By attending over this history, the transformer can pick out the information most relevant to the current situation, such as gait patterns and contact states.
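"Causal" here means that the model's output at a given time step may depend only on that step and earlier ones, never on the future. The following is a minimal, dependency-free sketch of that masking rule in a single attention head. It is not the researchers' model: for brevity the tokens act directly as queries, keys, and values, whereas a real transformer would apply learned projections, multiple heads, and many layers.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention(tokens: List[Vector]) -> List[Vector]:
    """Single-head self-attention where step t sees only steps 0..t.

    Simplification (an assumption of this sketch): tokens are used
    directly as queries, keys, and values, with no learned weights.
    """
    dim = len(tokens[0])
    outputs = []
    for t, query in enumerate(tokens):
        visible = tokens[: t + 1]  # the causal mask: drop future steps
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in visible
        ]
        weights = softmax(scores)
        # Weighted average of the visible tokens, one dimension at a time.
        outputs.append([
            sum(w * value[i] for w, value in zip(weights, visible))
            for i in range(dim)
        ])
    return outputs
```

Each input vector stands in for one observation-action token. Changing the last token leaves every earlier output untouched, which is the property that lets such a model run step by step on a robot as new sensor readings arrive.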

Transformers, best known as the architecture behind large language models, are well suited to predicting the next element in long data sequences. The causal transformer used in this control system learns from sequences of observations and actions, which lets it anticipate the consequences of its actions and adjust them to the terrain, even terrain it has never encountered before.

The researchers hypothesize that the history of observations and actions implicitly encodes information about the world that a powerful transformer model can use to adapt its behavior dynamically at test time. They refer to this concept as “in-context adaptation,” which mirrors how language models use context to learn new tasks and refine their outputs during inference.
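One way to picture in-context adaptation is a controller whose weights never change after training but whose output still shifts as its window of recent history fills up. The sketch below is a toy illustration under that assumption. `ContextWindowController`, `toy_policy`, and the window length are hypothetical names and values, and the hand-written policy merely stands in for the trained transformer.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple

Observation = List[float]
Action = List[float]
Step = Tuple[Observation, Action]
Policy = Callable[[List[Step], Observation], Action]


class ContextWindowController:
    """Feeds a fixed policy the most recent observation-action pairs."""

    def __init__(self, policy: Policy, context_len: int) -> None:
        self.policy = policy
        # Oldest steps fall out automatically once the window is full.
        self.history: Deque[Step] = deque(maxlen=context_len)

    def act(self, observation: Observation) -> Action:
        action = self.policy(list(self.history), observation)
        self.history.append((observation, action))
        return action


def toy_policy(history: List[Step], observation: Observation) -> Action:
    """Hypothetical stand-in for the transformer.

    The rule itself is fixed, yet its output depends on context:
    the average of past errors shifts the correction it applies.
    """
    past_errors = [obs[0] for obs, _ in history]
    bias = sum(past_errors) / len(past_errors) if past_errors else 0.0
    return [-(observation[0] + bias)]


controller = ContextWindowController(toy_policy, context_len=3)
first = controller.act([1.0])   # empty context
second = controller.act([1.0])  # same observation, richer context
```

Here `first` and `second` differ even though the observation is identical, because the second call sees one step of history. The real system's adaptation is learned rather than hand-coded, but the interface is the same: no retraining, only more context.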

Transformers have proven to be stronger learners than other sequence models such as temporal convolutional networks (TCNs) and long short-term memory networks (LSTMs). Their architecture scales with additional data and computational power, and it can be extended with extra input modalities.

Over the past year, transformers have become a significant asset to the robotics community. Their versatility has been used to improve robots in several ways, including better encoding and mixing of different modalities and translating high-level natural language instructions into specific planning steps. The researchers believe that transformers will facilitate future progress in scaling learning approaches for real-world humanoid locomotion.

The breakthrough control system developed by researchers at the University of California, Berkeley, has paved the way for versatile humanoid robots capable of navigating a variety of terrains and environments. By leveraging deep learning and sim-to-real transfer, the control system exhibits unprecedented adaptability and resilience. The power of transformers allows the system to dynamically adjust its behavior based on the environment, even in novel scenarios. With this advancement, the future of humanoid robotics looks promising as these robots can become valuable assistants in various domains, from personal assistance to industrial applications.
