The use of artificial intelligence in political campaigns has raised concerns about the spread of misinformation and its potential influence on voters. In January, OpenAI banned the use of its technology to build chatbots that impersonate political candidates or spread false information about voting. The move was intended to safeguard the integrity of political discourse and to counter the manipulation of public opinion.

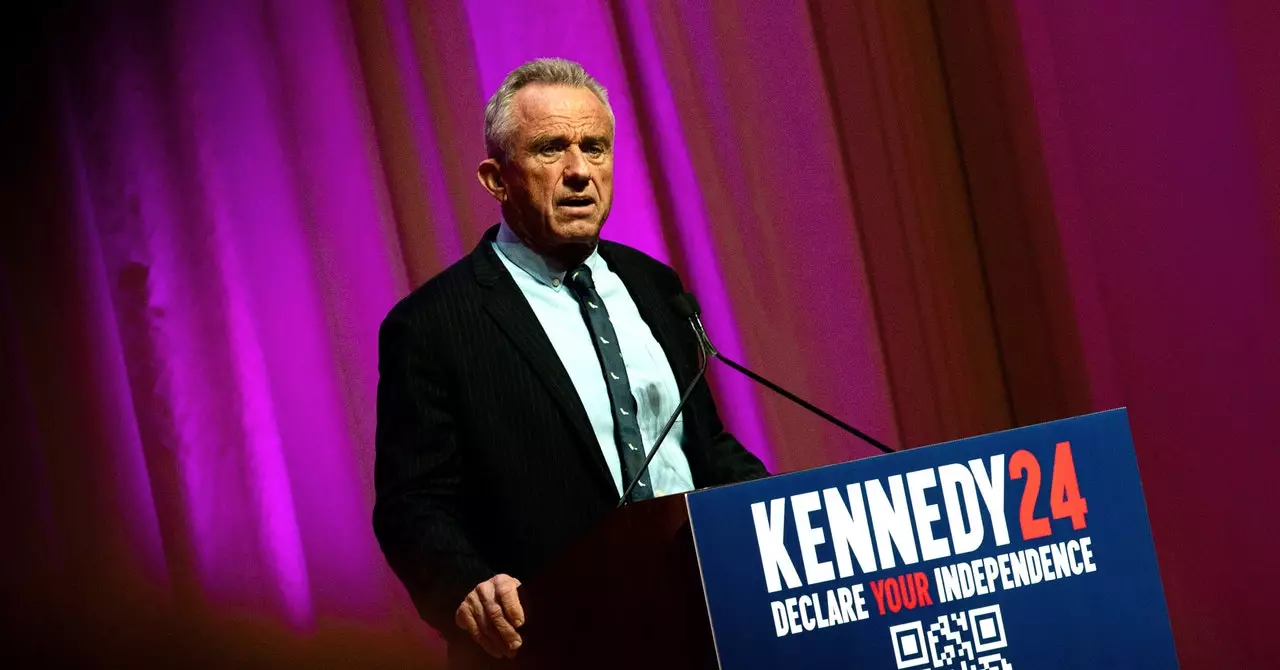

Some political campaigns have deployed chatbots without disclosing the AI models that power them, but investigations have traced connections to companies such as LiveChatAI. LiveChatAI offers customer-support chatbots powered by GPT-4 and GPT-3.5, and it references ChatGPT's capabilities. The specific models behind the Kennedy campaign's chatbot, however, remain undisclosed, which raises questions about transparency and accountability in AI-driven political messaging.

Microsoft's multibillion-dollar investment in OpenAI has led it to integrate ChatGPT models into its own services, including the Azure OpenAI Service. Although OpenAI restricts electoral use of its models, the Kennedy campaign's chatbot ran on Microsoft's AI technology without facing any immediate repercussions. Microsoft emphasized the importance of guiding customers toward responsible AI use to prevent misinformation, a stance that reflects the delicate balance between technological innovation and ethical considerations.

In the case of Dean.bot, a chatbot that mimicked a Democratic presidential candidate and gave questionable answers to voters' questions, OpenAI blocked the developer, and the chatbot service was subsequently removed. The episode underscored how difficult it is to monitor AI applications in political settings. The Kennedy campaign's chatbot later disappeared as well, leaving only a vague error message, which hinted at possible regulatory pressure and at the ethical dilemmas surrounding AI-driven political communication.

No explicit regulations govern the use of AI in political campaigning, which leaves a gray area: tech companies such as Meta, Microsoft, and Google have vague terms of service that do not directly address political applications. As political campaigns test how far they can use AI for strategic messaging, the lack of comprehensive guidelines risks unchecked influence and misinformation. Experts have highlighted the gap between AI providers' stated restrictions and how easily powerful AI models can be accessed for political purposes, and they point to the need for stronger oversight and transparency measures.

The emergence of AI-powered chatbots in political campaigns reflects a broader societal challenge: balancing technological advances with ethical responsibilities. While AI holds promise for improving communication and engagement, its deployment in sensitive domains such as elections demands careful scrutiny and proactive regulation. As stakeholders grapple with the implications of AI-driven political messaging, informed governance and ethical frameworks are needed more urgently than ever to uphold the integrity of democratic processes.
