Nvidia’s CEO, Jensen Huang, recently revealed that the company’s next-generation graphics processor for artificial intelligence, known as Blackwell, will cost between $30,000 and $40,000 per unit. Huang highlighted the new technology behind the chip, noting that Nvidia had to invest approximately $10 billion in research and development to bring Blackwell to life. The price range suggests that Blackwell, which is expected to be in high demand for training and deploying AI software, will be priced similarly to its predecessor, the H100, from the “Hopper” generation.

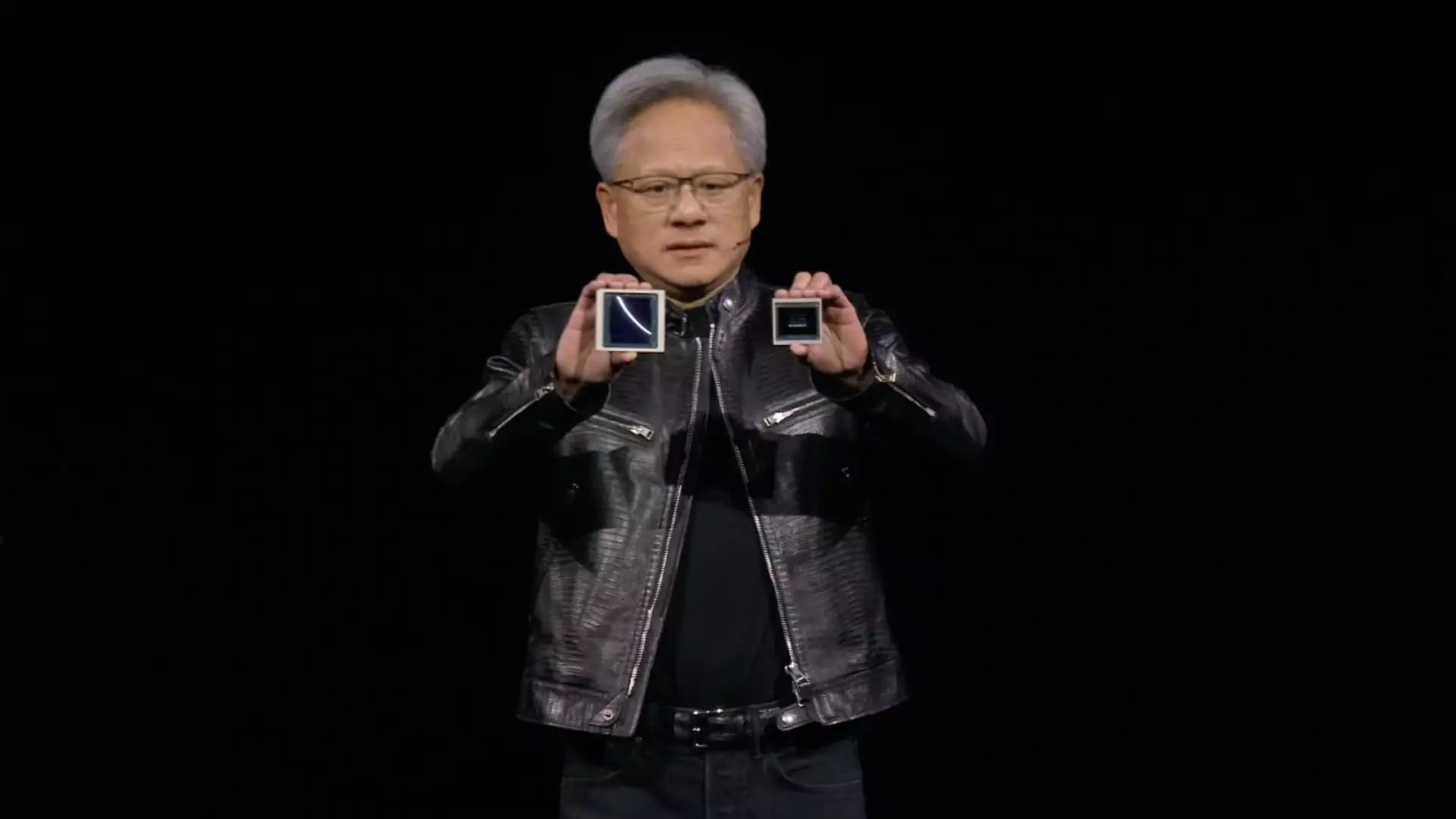

The introduction of the Hopper generation in 2022 marked a significant price increase for Nvidia’s AI chips compared to the previous generation. Nvidia typically unveils a new generation of AI chips every two years, with each iteration offering improved speed and energy efficiency. Blackwell, the latest addition to Nvidia’s lineup, combines two chips and is physically larger than its predecessors. The company uses the excitement surrounding a new chip release to drive orders for its GPUs.

Nvidia’s AI chips have played a pivotal role in driving a threefold increase in quarterly sales since the AI boom began in late 2022 with the announcement of OpenAI’s ChatGPT. Leading AI companies and developers, including Meta, have relied on Nvidia’s H100 GPUs for training their AI models. Meta, for instance, has disclosed plans to procure hundreds of thousands of Nvidia H100 GPUs to support its operations.

Nvidia does not publish list prices for its chips, which are available in various configurations. The price an end customer such as Meta or Microsoft ultimately pays depends on factors like the volume of chips purchased and the procurement channel. Customers can buy chips directly from Nvidia as part of a complete system, or through third-party vendors like Dell, HP, or Supermicro, which assemble AI servers equipped with Nvidia GPUs.

In a recent announcement, Nvidia unveiled three distinct versions of the Blackwell AI accelerator: the B100, B200, and GB200. Each variant features unique memory configurations and is set to be released later this year. The GB200 model stands out for pairing two Blackwell GPUs with an Arm-based CPU, offering enhanced performance capabilities for AI applications.
