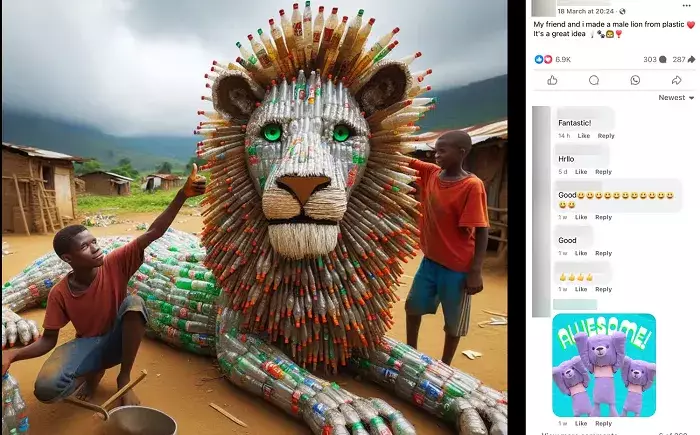

With generative AI posts flooding social media platforms like Facebook, Meta is updating its AI content labels so that a broader range of synthetic content is accurately tagged. The move responds to the growing prevalence of AI-generated content that is often misleading and deceptive to users.

Meta acknowledges that its existing approach to AI labeling is too narrow, as it primarily focuses on videos that are manipulated to make it appear as if a person is saying something they did not say. The company’s manipulated media policy, established in 2020, was created at a time when realistic AI-generated content was rare. However, with advancements in AI technology, people are now able to create realistic AI-generated content in various forms such as audio and photos. As a result, Meta is updating its policies to encompass a wider range of AI-generated content.

Implementation of “Made with AI” Labels

Under the new labeling process, Meta will apply “Made with AI” labels to more content, either when industry-standard AI image indicators are detected or when people disclose that they are uploading AI-generated content. The goal is to give users more information and context about the content they are viewing, allowing them to better assess its authenticity.

Meta’s decision to leave more AI-generated content up on its platforms, accompanied by labels, aims to serve an informational and educational purpose. Clearly identifying content as AI-generated immediately tells users that the content is not real. This not only raises awareness about the capabilities of AI technology but also helps users recognize synthetic content in the future.

While Meta’s updated approach to AI content labeling is a step in the right direction, it is not without its challenges. As AI systems continue to advance, detecting generative AI within posts may become increasingly difficult, which could limit Meta’s automated capacity to flag deceptive content. However, by providing moderators with more enforcement powers, Meta aims to mitigate these challenges and improve detection capabilities.

The effectiveness of Meta’s new AI content labels remains to be seen, but the labels have the potential to significantly affect how AI-generated content is used on social media platforms. By raising awareness of the capabilities and risks associated with AI fakes, Meta’s initiative could lead to a more informed and discerning user base. As technology continues to advance, it is essential for companies like Meta to stay vigilant and proactive in combating the spread of misleading and deceptive content.

Meta’s efforts to enhance the detection of synthetic content through updated AI labeling policies represent a positive step towards promoting transparency and authenticity on social media platforms. By providing users with more information and context about AI-generated content, Meta is empowering individuals to make informed decisions and navigate the digital landscape with greater awareness.
