Given the rapid progress of artificial intelligence, one might expect robots to be advancing just as quickly. They are not. Most robotic systems, especially those in factories and warehouses, still follow rigid, carefully preprogrammed routines for repetitive tasks, with little ability to adapt to or learn from their surroundings. This lack of what researchers call “general physical intelligence” severely limits them: they can perform only a narrow set of actions, with minimal dexterity.

The excitement around AI has fueled considerable optimism about the future of robotics. High-profile projects such as Tesla’s humanoid robot, Optimus, point to a possible path toward robots that can handle a much wider range of tasks in human environments. Tesla CEO Elon Musk has boldly predicted that Optimus could be available for $20,000 to $25,000 by 2040 and capable of a wide variety of chores. Ambitious as such predictions are, they also underscore the substantial obstacles that remain before robots can match human adaptability and versatility.

Historically, efforts to teach robots have been narrow, training each machine for a single task, which prevented knowledge from transferring between tasks or machines. Emerging research, however, suggests that robots can learn from one another under certain conditions. Google’s Open X-Embodiment project, for example, showed that learned experience could be shared across 22 distinct robots in 21 research labs, hinting at a future in which pooled knowledge substantially improves robotic capabilities.

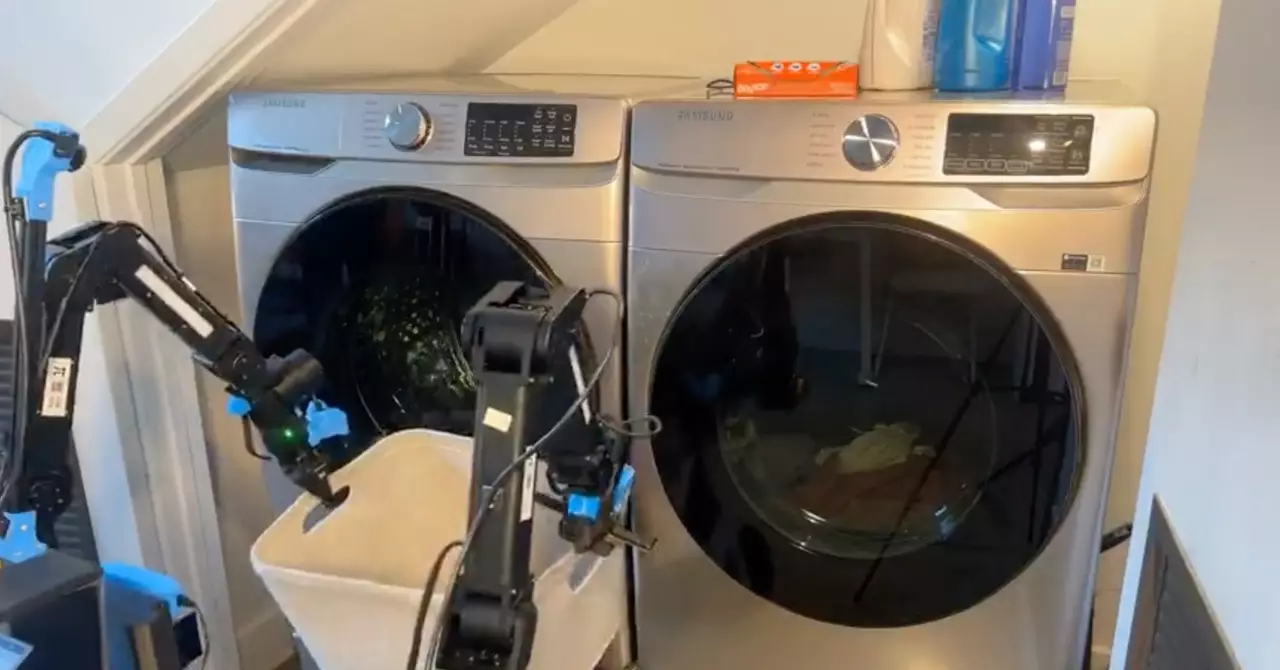

Despite this progress, a critical challenge remains: far less data is available for training robots than for training large language models. To close this gap, companies such as Physical Intelligence must generate their own training data and devise methods that learn effectively from limited data. Their approach combines vision-language models, which process images and text together, with diffusion modeling techniques borrowed from AI image generation. The goal is a more general form of learning that lets robots handle a wide variety of tasks.
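
The article does not describe Physical Intelligence’s implementation, so the following is only a toy sketch of the general idea behind diffusion-based action generation: start from random noise and denoise it step by step into an action vector, conditioned on some context. To keep it runnable without a trained neural network, it assumes (hypothetically) that the correct actions for a given context are Gaussian around a known vector, which gives the noise predictor a closed form. `fake_vlm_embedding` is a made-up stand-in for a vision-language model, and the noise-schedule values are arbitrary choices for illustration.

```python
import math
import random


# HYPOTHETICAL stand-in for a vision-language model: maps an instruction to a
# context vector. A real system would compute this from camera images and text.
def fake_vlm_embedding(instruction: str) -> list[float]:
    table = {
        "pick up cup": [0.5, -0.2, 0.1],
        "fold shirt": [-0.3, 0.4, 0.0],
    }
    return table[instruction]


def denoise_action(context: list[float], steps: int = 50,
                   data_std: float = 0.05, seed: int = 0) -> list[float]:
    """DDPM-style reverse sampling of an action vector (toy example).

    ASSUMPTION: clean actions for this context are Gaussian with mean
    `context` and standard deviation `data_std`, so the optimal noise
    predictor can be written down exactly instead of being learned.
    """
    rng = random.Random(seed)
    # Linear noise schedule (arbitrary illustrative values).
    betas = [1e-4 + (0.2 - 1e-4) * i / (steps - 1) for i in range(steps)]
    alphas = [1.0 - b for b in betas]
    alpha_bars = []
    prod = 1.0
    for a in alphas:
        prod *= a
        alpha_bars.append(prod)

    # Start from pure Gaussian noise.
    x = [rng.gauss(0.0, 1.0) for _ in context]
    for t in reversed(range(steps)):
        ab = alpha_bars[t]
        # Variance of the noised data distribution at step t.
        var_t = ab * data_std ** 2 + (1.0 - ab)
        # Closed-form estimate of the noise that was added (the "denoiser").
        eps_hat = [math.sqrt(1.0 - ab) * (xi - math.sqrt(ab) * mi) / var_t
                   for xi, mi in zip(x, context)]
        # Remove the predicted noise and rescale (DDPM mean update).
        coef = betas[t] / math.sqrt(1.0 - ab)
        x = [(xi - coef * ei) / math.sqrt(alphas[t])
             for xi, ei in zip(x, eps_hat)]
        # Add fresh noise on every step except the last.
        if t > 0:
            sigma = math.sqrt(betas[t])
            x = [xi + sigma * rng.gauss(0.0, 1.0) for xi in x]
    return x
```

For example, `denoise_action(fake_vlm_embedding("pick up cup"))` returns a three-element action vector that lands near `[0.5, -0.2, 0.1]`. In a real system the closed-form denoiser would be replaced by a neural network trained on robot demonstrations, which is exactly where the scarcity of training data becomes a bottleneck.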

Researchers and developers still have a long way to go. Some initial scaffolding exists, but robots need extensive refinement before they can live up to their creators’ aspirations. Seamless interaction between humans and robots in everyday settings will require major advances in both learning and adaptability. The quest for more intuitive, broadly capable robots continues.
