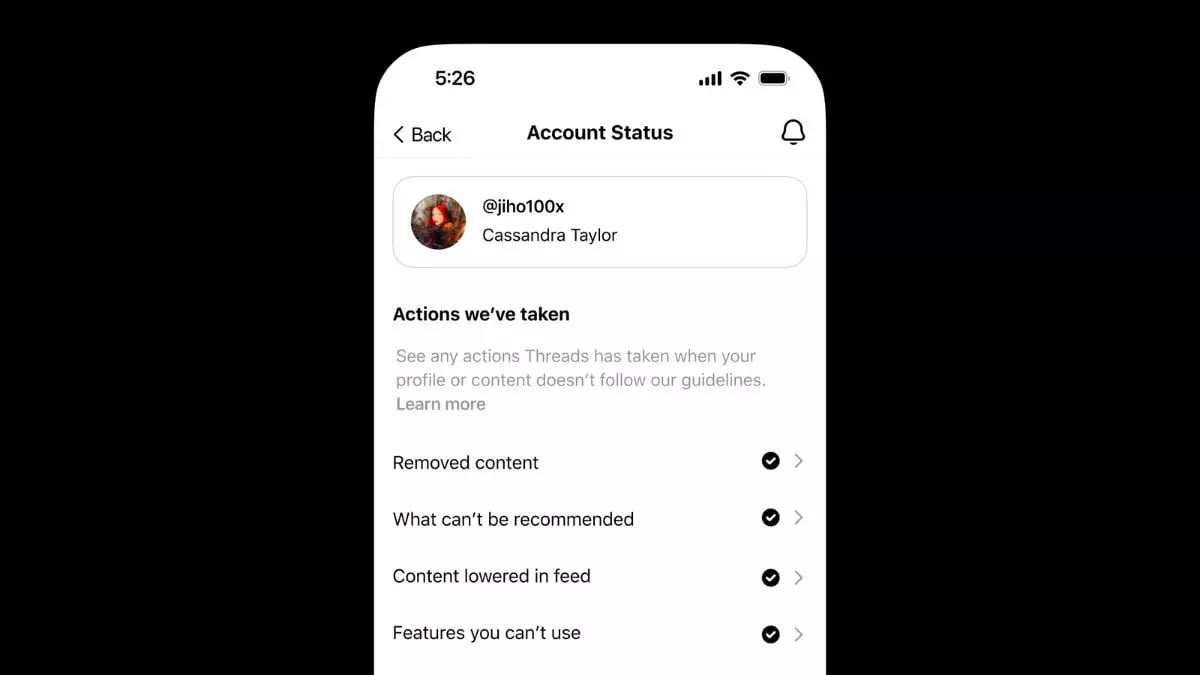

In a significant move to enhance user experience, Threads has unveiled its new Account Status feature, a tool designed to give users clear insight into how their posts and profiles are being treated. Previously exclusive to Instagram, the feature helps Threads users understand how content moderation works on the platform. Being able to see the status of a post (whether it has been removed, demoted, or otherwise restricted) gives users greater transparency into how their contributions measure up against the community standards established by Meta.

Importance of Transparency in Moderation

The introduction of the Account Status feature signals a shift towards greater accountability in social media moderation. Users have long been frustrated by opaque moderation processes that left them in the dark about why their content was removed. By bringing over a tool already available on Instagram, Threads addresses this concern directly. Users can now check for actions taken on their posts or replies, making the platform more user-centric. This transparency is not just about informing users; it empowers them to take action if they believe a moderation decision was unjustified.

The ability to submit a report requesting a review of a moderation decision can be crucial for users who want a fair platform. It gives them a voice in a space that can sometimes feel dictated by algorithms rather than community values. In an era where social media engagement is increasingly scrutinized, Threads has recognized the need for a balanced approach that respects individual expression while adhering to community guidelines.

Navigating Content Moderation with Clarity

Threads delineates four specific actions that can be imposed on user posts and profiles: removal, exclusion from recommendations, lowered placement in feeds, and restriction of features. This categorization matters because it reflects the nuanced understanding of content moderation that Threads aims to foster. Unlike platforms that might simply delete unwanted posts, Threads offers a spectrum of interventions that can help maintain a vibrant and respectful community.

Importantly, the rollout also addresses artificial intelligence, reaffirming that these community standards apply universally and across all content types, AI-generated content included. This underscores Threads’ commitment to evolving alongside technology and societal expectations.

The Interplay of Freedom and Safety

While Meta asserts its commitment to freedom of expression, it also enforces boundaries meant to protect users’ dignity, privacy, and safety. Here lies a delicate balance that many social media platforms struggle to achieve: promoting open dialogue while limiting harmful expression. Threads attempts to navigate this terrain by weighing the public interest when assessing content that may violate its guidelines. This approach not only reflects an understanding of the complexities of online interactions but also aligns with global human rights standards.

Moreover, the possibility that content may be removed because of ambiguous language or insufficient context could have a useful side effect. It prompts users to think critically about their posts, encouraging a culture of mindfulness in communication. By potentially restricting posts that lack clarity, Threads is in effect inviting users to express themselves more responsibly.

The launch of the Account Status feature could signify a more compassionate approach to social media interaction, one that values both accountability and the user’s right to express themselves.
