In the world of artificial intelligence, the conversation has long been dominated by the behemoths of machine learning: large language models (LLMs) with hundreds of billions of parameters. These titanic models have proven their worth in applications ranging from chatbots to complex data analysis, but their capabilities come at a staggering price. Yet tech giants like Google and OpenAI have recently released much smaller models, showing that real capability doesn’t always require vast resources. Ingenuity is instead sparking a shift toward small language models (SLMs).

LLMs like Google’s Gemini 1.0 Ultra demand monumental investments (its training reportedly cost around $191 million), and SLMs are challenging that status quo. They use a small fraction of the parameters, typically 10 billion or fewer. This reduces the computational burden and makes them practical on far more modest hardware. The environmental implications are significant as well: LLMs are energy-hungry, and a single ChatGPT query reportedly consumes about ten times as much energy as a Google search. In an era when sustainability matters, SLMs offer a way to marry functionality with eco-consciousness.
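To make the link between parameter count and hardware requirements concrete, here is a back-of-the-envelope sketch of the memory needed just to store a model’s weights. It assumes 2 bytes per parameter (16-bit floats) and ignores activations, caches, and runtime overhead; the helper `weight_memory_gb` and the 500-billion-parameter LLM are hypothetical, chosen only to represent the "hundreds of billions" scale.

```python
def weight_memory_gb(num_params: int, bytes_per_param: int = 2) -> float:
    """Approximate storage for model weights alone, in gigabytes (1 GB = 1e9 bytes).

    Assumption: 2 bytes per parameter (16-bit floats). Activations, caches,
    and framework overhead are ignored, so real requirements are higher.
    """
    return num_params * bytes_per_param / 1e9


# Hypothetical comparison: an 8B-parameter SLM vs. a 500B-parameter LLM
for name, params in [("8B-parameter SLM", 8_000_000_000),
                     ("hypothetical 500B-parameter LLM", 500_000_000_000)]:
    print(f"{name}: ~{weight_memory_gb(params):.0f} GB of weights")
```

Under these assumptions the smaller model needs roughly 16 GB for its weights and the larger one roughly 1,000 GB, a gap of about sixty-fold before any computation happens.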

Small but Mighty: The Role of Specialization

One of the most compelling aspects of SLMs is their specialization. They may lack the versatility of their larger cousins, but they excel at well-defined tasks such as summarizing dialogues or collecting data from smart devices. As Zico Kolter of Carnegie Mellon University has observed, a model with around 8 billion parameters is good enough for many such tasks, often meeting or surpassing expectations for specific applications.

For example, a health care chatbot powered by an SLM can deliver concise and relevant answers to patients without the overhead of a massive, generalized model. This focused approach not only enhances performance but also represents a fascinating shift from the one-size-fits-all mentality that has dominated AI for so long. SLMs embody the principle that effective AI does not require colossal frameworks but can thrive within streamlined, specialized architectures.

Innovative Training Techniques: Knowledge Distillation and Pruning

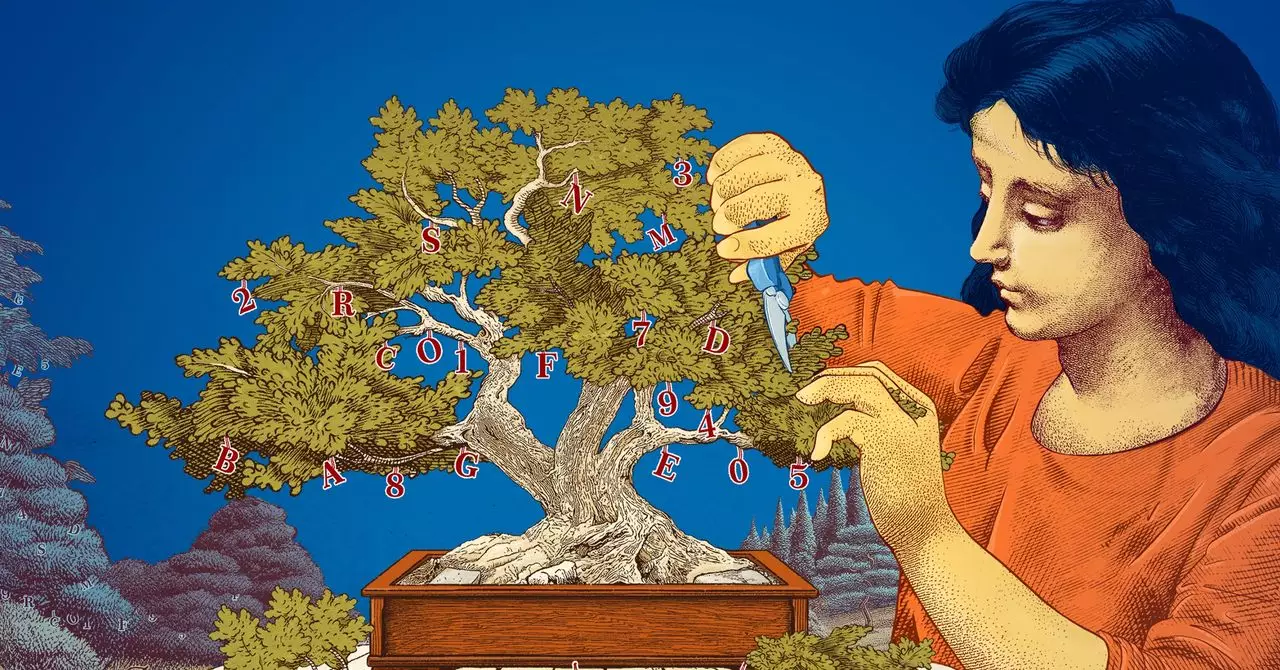

The development of SLMs has also brought training techniques to the fore that further underscore their value. Knowledge distillation, in which a large model passes what it has learned on to a smaller one, is a prime example. The large model can, for instance, be used to clean up raw training data, filtering out noise so that the smaller model learns only from high-quality examples. Much as a mentor guides a novice, this lets smaller models benefit from the depth of knowledge housed in their larger counterparts.
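Distillation comes in several forms. Besides using a large model to curate data, a widely used variant trains the student to match the teacher’s output probabilities, softened by a temperature so that the student also learns which wrong answers the teacher considers plausible. The sketch below shows that soft-target loss in plain Python; it illustrates the general technique and is not a claim about how any particular SLM was trained. The function names and the temperature of 2.0 are illustrative assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2.

    The T^2 factor keeps gradient magnitudes comparable across temperatures.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student) if p > 0)
    return (temperature ** 2) * kl


teacher = [4.0, 1.0, 0.5]
print(distillation_loss(teacher, teacher))          # identical outputs -> 0.0
print(distillation_loss([0.5, 1.0, 4.0], teacher))  # mismatched student -> positive loss
```

In practice this term is usually combined with an ordinary loss on the true labels, and the student’s parameters are updated to minimize the sum.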

Pruning has also drawn renewed interest from researchers. Inspired by the way the human brain gains efficiency by trimming synaptic connections as a person ages, pruning systematically removes redundant or non-essential parameters from a neural network. The result is a leaner architecture that retains most of its functional capability. As early as 1989, Yann LeCun argued that up to 90% of a trained network’s parameters could be removed without sacrificing efficiency, which shows how much headroom the approach offers. Smaller networks may also make it easier to understand how a model arrives at its answers.
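The core idea of pruning can be sketched in a few lines. This example uses simple magnitude pruning: it zeroes out the given fraction of weights with the smallest absolute values. That criterion is an assumption made for simplicity; LeCun’s original method instead ranked parameters using second-derivative information. The function `magnitude_prune` and the sample weights are hypothetical.

```python
def magnitude_prune(weights, fraction=0.9):
    """Return a copy of `weights` with the smallest-magnitude `fraction` set to zero."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    n_prune = round(len(weights) * fraction)  # how many weights to remove
    # Rank indices from smallest to largest absolute value
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


weights = [0.5, -0.01, 0.03, -0.8, 0.002, 0.1, -0.04, 0.06, 0.009, 1.2]
print(magnitude_prune(weights, 0.9))  # only 1.2, the largest weight, survives
```

Real networks are pruned layer by layer or globally across millions of weights, and the pruned model is usually fine-tuned afterward to recover any lost accuracy.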

As Leshem Choshen of the MIT-IBM Watson AI Lab suggests, smaller models let researchers try new ideas cheaply, without the enormous cost and risk of training a large model. This is where real progress often happens: in open-ended exploration, where researchers can test new concepts and refine designs with far less at stake.

Pragmatic Solutions for Real-World Challenges

LLMs will certainly continue to serve in roles that demand broad knowledge and complex reasoning, but small language models offer a pragmatic answer to the cost, energy, and accessibility challenges facing AI. For individuals and organizations that want to apply AI in specific contexts, SLMs have clear advantages. They promise significant savings in time, money, and energy while delivering results that are more than satisfactory for well-defined use cases.

As we move toward a more responsible and sustainable AI landscape, SLMs represent a forward-thinking path that prioritizes efficiency, accessibility, and room for innovation. The rise of small language models is not merely a trend; it is a necessary evolution in the field of artificial intelligence. This shift challenges us to rethink our approaches, prioritize smart design, and embrace models that, while smaller, deliver strong performance on the tasks they are built for.
