The integration of artificial intelligence, particularly deep learning models, into various industries signifies a technological leap forward. Applications range from healthcare diagnostics to financial predictions, but these models typically depend on cloud computing, which raises substantial security issues. This is especially pertinent in healthcare, where patient privacy is paramount and a breach could not only leak sensitive information but also undermine public trust in the technology. To address these concerns, researchers at MIT have proposed a security protocol that combines quantum mechanics with deep learning to secure data interactions between users and cloud servers.

Deep learning models, such as those powering advanced predictive analytics in medicine and finance, require considerable computational power. As a result, they are often deployed on cloud servers, which are efficient but increase the potential for data breaches. For sensitive information like medical records, the consequences of a breach can be severe. Hospitals and healthcare providers are cautious about using AI tools that could compromise patient confidentiality, so a solution that enables safe use of these models is critical.

The MIT team’s endeavor to develop a security system that safeguards data by utilizing the unique properties of quantum light represents a significant innovation. The reliance on quantum mechanics as a basis for secure data transmission offers a way to protect sensitive information while still leveraging the capabilities of powerful deep learning algorithms. By ensuring that data sent to and from cloud servers remain secure, this framework promises to mitigate privacy concerns that plague cloud computing environments.

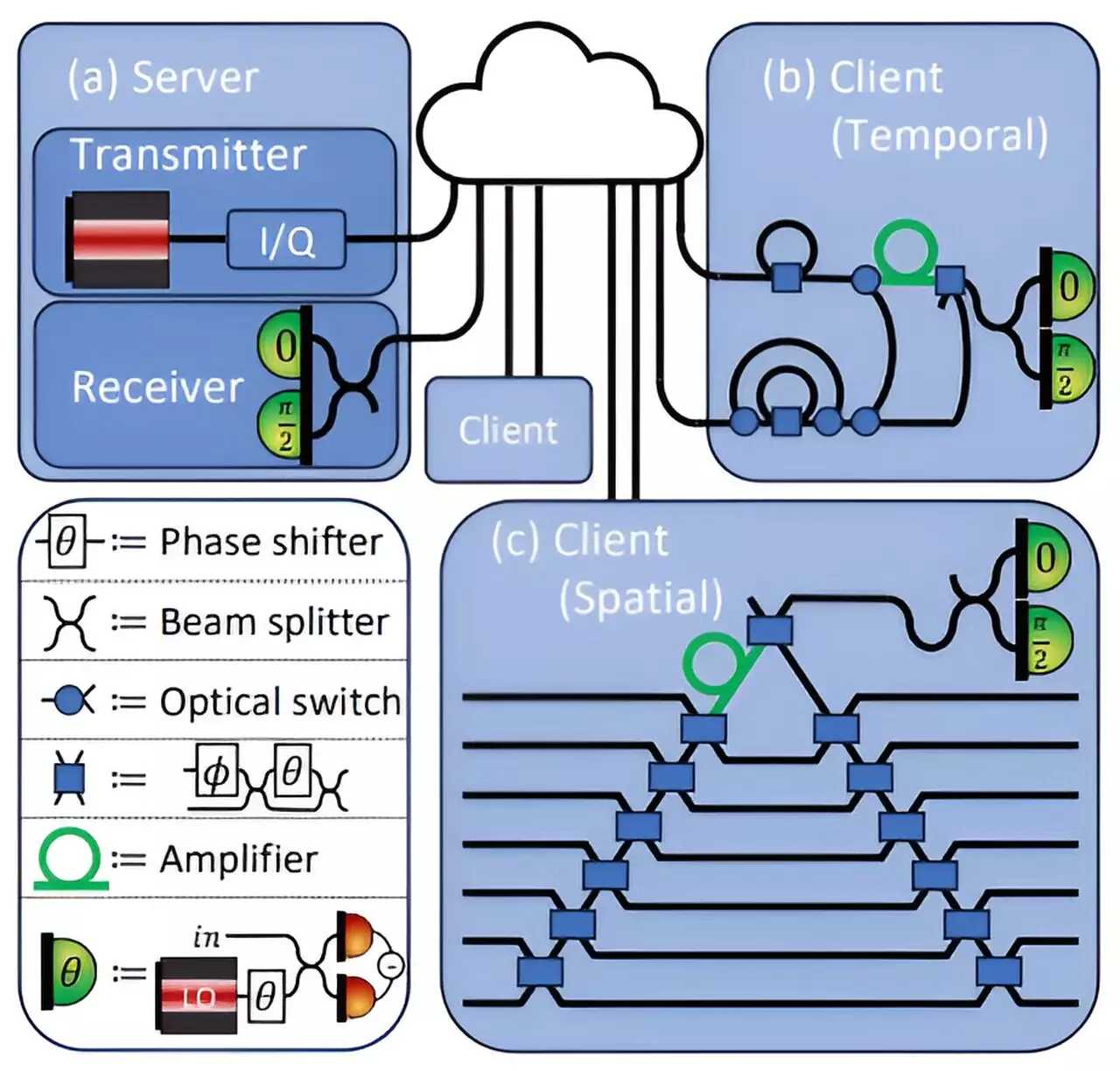

At the heart of this security protocol is the manipulation of quantum light. The researchers have devised a method for encoding deep learning model parameters into laser light transmitted via fiber optic communication systems. This quantum technique capitalizes on the no-cloning principle, which dictates that quantum data cannot be perfectly copied. As a result, an attacker who tries to intercept the information flowing between the client and server cannot do so without leaving a detectable trace.
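To make the idea of "interception leaves a trace" more concrete, here is a purely classical toy model in Python. It is not a quantum simulation and not the MIT team's method: the function names, the Gaussian noise model, and the `threshold` value are all assumptions chosen for illustration. The sketch only shows the general pattern of comparing what was sent with what came back and flagging the exchange when the disturbance is too large.

```python
import random
import statistics

def transmit(values, tap_noise, rng):
    """Return the values as seen at the far end of a hypothetical channel.

    tap_noise is an assumed stand-in for the disturbance that an
    interception attempt would introduce; 0.0 means an untouched channel.
    """
    return [v + rng.gauss(0.0, tap_noise) for v in values]

def disturbance(sent, received):
    """Root-mean-square deviation between sent and received values."""
    return statistics.fmean((s - r) ** 2 for s, r in zip(sent, received)) ** 0.5

def looks_tampered(sent, received, threshold=0.05):
    """Flag the exchange if the disturbance exceeds an assumed threshold."""
    return disturbance(sent, received) > threshold

rng = random.Random(42)
weights = [rng.uniform(-1.0, 1.0) for _ in range(1000)]

clean = transmit(weights, tap_noise=0.0, rng=rng)
tapped = transmit(weights, tap_noise=0.2, rng=rng)

print(looks_tampered(weights, clean))   # False
print(looks_tampered(weights, tapped))  # True
```

In a real quantum channel the disturbance follows from physics rather than from an added noise term, so this should be read as an analogy for the detection step only.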

In practice, a neural network's weights are encoded into an optical field and then used to perform computations without exposing sensitive information. The server sends its model to a client seeking a prediction, such as the likelihood of a cancer diagnosis based on medical imaging. The arrangement is designed so that sensitive data stays concealed: neither party fully exposes its private information (the client's data, the server's model) to the other.
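The data flow described here can be sketched in ordinary Python. This is a minimal illustration under stated assumptions, with none of the protocol's security properties: the tiny two-layer model, its weight values, and the names `server_layers` and `client_predict` are all hypothetical. What it shows is the shape of the exchange, in which the server supplies its model one layer at a time and the client applies each layer to its own private input, which never leaves the client.

```python
import math

def server_layers():
    """Hypothetical server-side model, handed out one layer at a time.

    Each item is (weights, bias, activation); the values are made up.
    """
    yield [[0.5, -0.2], [0.1, 0.8]], [0.0, 0.1], "relu"
    yield [[1.0, -1.0]], [0.05], "sigmoid"

def dense(weights, bias, x):
    """Apply one fully connected layer: weights @ x + bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def activate(values, kind):
    """Apply the named activation element-wise."""
    if kind == "relu":
        return [max(0.0, v) for v in values]
    if kind == "sigmoid":
        return [1.0 / (1.0 + math.exp(-v)) for v in values]
    raise ValueError(f"unknown activation: {kind}")

def client_predict(private_input):
    """Run the forward pass on the client, consuming layers as they arrive."""
    x = list(private_input)
    for weights, bias, kind in server_layers():
        x = activate(dense(weights, bias, x), kind)
    return x[0]

score = client_predict([1.0, 2.0])
print(f"predicted probability: {score:.3f}")
```

In this plain version the client sees every weight in the clear, which is exactly the exposure the quantum encoding is meant to limit.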

What stands out about this quantum protocol is that it preserves both data security and prediction accuracy. Tests conducted by the team have shown that the framework can maintain an accuracy of 96%, making it a viable option for real-world applications without sacrificing performance.

In the broader context of digital security, achieving a robust methodology for protecting data exchanges stands to revolutionize industries reliant on sensitive information handling. The successful implementation of this quantum protocol offers a compelling argument for addressing privacy issues without compromising the utility of advanced AI systems.

The findings not only represent a technological advance but also carry significant implications for regulatory and ethical considerations regarding AI usage in sensitive areas. Secure frameworks will encourage the adoption of AI tools, especially in healthcare, potentially leading to innovations that save lives and enhance the precision of medical diagnostics.

As researchers explore applying the protocol to federated learning, an approach in which multiple data holders collaborate to train a centralized model, there exist promising opportunities for collective learning without compromising individual data privacy.
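For readers unfamiliar with federated learning, the core aggregation step can be sketched in a few lines of Python. This is a generic weighted-averaging scheme in the style commonly called federated averaging, offered as an assumption about what such a setup might look like; it is not taken from the MIT work, and the function name and example numbers are hypothetical. Each data holder trains locally and shares only model parameters, which a coordinator combines in proportion to how much data each holder used.

```python
def federated_average(updates):
    """Combine per-holder parameters into one central parameter vector.

    updates is a list of (parameters, num_examples) pairs, where
    parameters is a list of floats of the same length for every holder.
    Holders with more examples get proportionally more weight.
    """
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(params[i] * n for params, n in updates) / total
            for i in range(dim)]

# Two hypothetical data holders with different amounts of local data.
hospital_a = ([1.0, 2.0], 1)
hospital_b = ([3.0, 4.0], 3)

print(federated_average([hospital_a, hospital_b]))  # [2.5, 3.5]
```

The raw records never appear in this exchange, only the parameters, and those shared parameters are what a secure channel would need to protect.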

The groundwork laid by the MIT team provides an exciting glimpse into the future of secure machine learning applications. As security remains a central concern for those developing and implementing AI technologies, the fusion of quantum cryptography with deep learning models offers a promising remedy.

The research underscores the importance of bridging disparate fields such as deep learning and quantum security, which historically operated in silos. Its potential extends beyond just healthcare, reaching into financial services, autonomous systems, and any area where sensitive data is shared between parties.

Looking ahead, as experimental applications are tested further, questions remain about how these protocols will scale and how resilient they will be to real-world operational imperfections. Nevertheless, the strides made in marrying quantum mechanics with deep learning offer a real edge in securing AI's future while addressing persistent concerns about data privacy and integrity.
