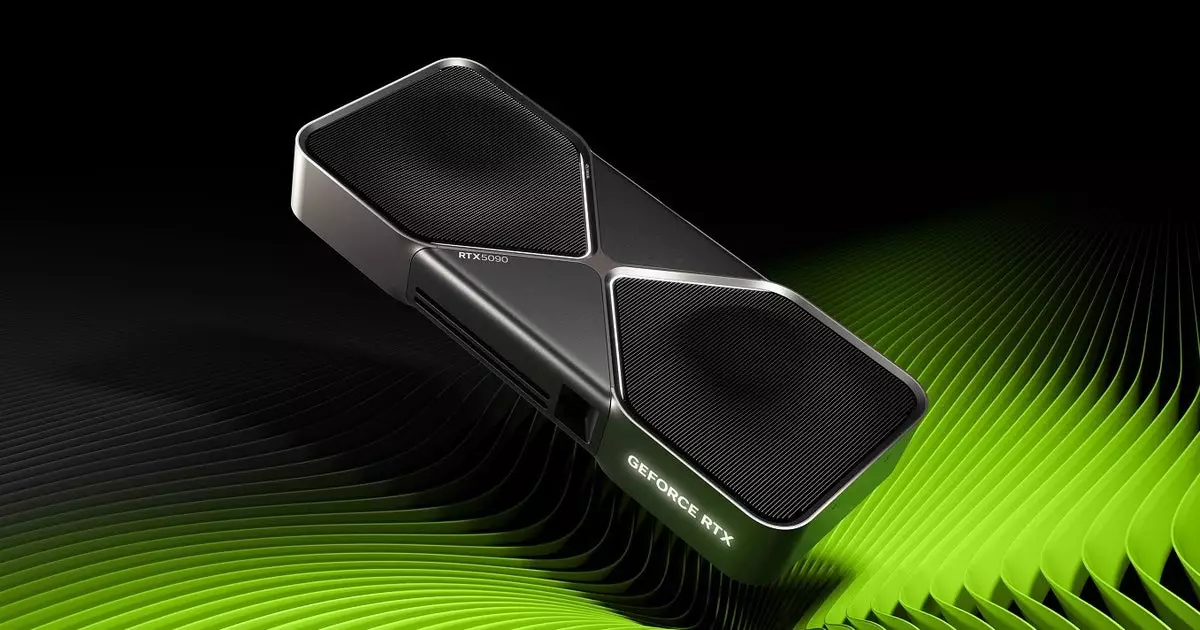

Last evening, the technology spotlight shone brightly on Nvidia as they revealed their highly anticipated RTX 50 series graphics cards at CES. While some in the gaming and tech communities were excited, I find myself grappling with skepticism, especially about the flagship RTX 5090. Priced at £1939 / $1999 and drawing a staggering 575W of power, it makes me wonder whether this GPU is a genuine product for gamers or a cleverly crafted marketing ploy designed to make the other models, the RTX 5080, RTX 5070 Ti, and RTX 5070, look more appealing by comparison.

Jensen Huang, Nvidia’s charismatic CEO, delivered the keynote in a bold snakeskin jacket. The image was striking, but it also felt emblematic of Nvidia’s approach to marketing: flashy yet potentially misleading. What followed was a deep dive into the specifications of the new GPUs, most of which offer some respite from the steep prices of their RTX 40 series counterparts. The RTX 5090, however, raises red flags, chiefly because of a price tag that seems outlandish in a market still recovering from years of inflated GPU costs.

The launch pricing strategy is telling. The RTX 4080 launched at £1269 / $1199 and the RTX 4070 at £589 / $599. By contrast, the RTX 50 series models below the flagship look competitively priced, with the RTX 5080 at £979 / $999 and the RTX 5070 at £539 / $549, which should ease the strain on buyers’ wallets somewhat. However, this could be a mere facade that distracts buyers from the possibility that the RTX 5090’s exorbitant cost sets the tone for future GPU pricing.

Interestingly, while the RTX 5090 and RTX 5070 Ti gain extra VRAM, every model moves to faster GDDR7 memory. That leap raises the question of how much such upgrades matter for actual gameplay when many gamers have yet to push earlier cards, like the RTX 4090, to their limits. The RTX 4090 already handles demanding titles like “Indiana Jones and the Great Circle” without breaking a sweat, which makes an even more powerful RTX 5090 feel almost excessive.

Much of the speculation focuses on DLSS 4, whose headline feature, Multi-Frame Generation, uses AI to generate up to three frames for every frame the GPU actually renders. That is an enticing proposition for gamers, but it calls for caution, because it can also distort Nvidia’s benchmarks. When RTX 50 cards running DLSS 4 are compared against older cards running earlier versions of DLSS, the results may showcase inflated frame rates rather than a genuine improvement in how games play.
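To put that multiplier in perspective, here is a back-of-the-envelope sketch in Python. It assumes an idealized case with no frame-generation overhead, and the 30 fps base rate and the function names are hypothetical, chosen purely for illustration. The point is that the number on a frame counter can quadruple while the gap between frames the game actually renders stays exactly the same.

```python
# Idealized frame-generation arithmetic (assumption: zero overhead from generating frames).

def displayed_fps(rendered_fps: float, generated_per_rendered: int) -> float:
    """Frames shown per second when each rendered frame is accompanied by N generated ones."""
    return rendered_fps * (1 + generated_per_rendered)


def rendered_frame_time_ms(rendered_fps: float) -> float:
    """Milliseconds between frames the game actually renders; frame generation doesn't change this."""
    return 1000.0 / rendered_fps


if __name__ == "__main__":
    base = 30.0  # hypothetical rendered frame rate
    for n in (0, 1, 3):  # no frame generation, one generated frame, up to three (as with DLSS 4)
        print(
            f"{n} generated per rendered: {displayed_fps(base, n):.0f} fps shown, "
            f"{rendered_frame_time_ms(base):.1f} ms between rendered frames"
        )
```

With three generated frames per rendered frame, 30 rendered fps would appear as 120 fps on a counter, yet the game still renders a new frame only about every 33.3 ms.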

Moreover, while extra frames make motion look smoother, they can also add input lag. Generated frames are slotted in between rendered ones, so the game still responds to input only at its rendered frame rate, and holding frames back to generate the in-between ones can add milliseconds before a mouse movement shows up on screen. Nvidia says its newly announced Reflex 2 technology will mitigate this. It aims to cut input lag by coordinating the work of the CPU and GPU more effectively, a welcome addition to the ecosystem but not without its own complications.

Reflex 2, set to debut alongside the RTX 5090 and RTX 5080 on January 30, could be a saving grace amid Nvidia’s ambitious, and arguably reckless, GPU rollout. Through predictive rendering and frame warping, which adjusts a rendered frame to the latest mouse input just before it is displayed, it has the potential to minimize the lag gamers perceive. If the execution is flawless, it could significantly improve responsiveness, which is paramount in competitive play.

However, initial support for only a handful of games, such as “The Finals” and “Valorant,” raises concerns about the longevity and reach of this innovation. For many gamers, the practical benefits may remain out of reach until Reflex 2 is adopted more widely. Early adopters could end up disappointed if support for older cards and popular titles fails to expand in a timely manner.

Amid all these developments lies a broader conversation about generative AI’s role in gaming, a subject Nvidia has tried to address with their innovations. However, some implementations, such as the underwhelming “Co-Playable Character” concept presented with the Nvidia ACE toolkit, call into question how generative AI is being applied in games. Many enthusiasts doubt the value of creating subservient NPCs when the technology could be put to better use enhancing gameplay mechanics.

While Nvidia makes significant strides with their RTX 50 series and accompanying technologies, there’s a duality to these advancements that merits critical reflection. The glitzy unveiling may dazzle the untrained eye, but for those invested in gaming, a cautious approach will likely yield the best results as they navigate this new territory.
