In an era marked by rapid technological advancement and increased scrutiny over privacy, Meta Platforms, Inc. is trialing new security measures involving facial recognition. This initiative, while potentially beneficial for users and the platform, evokes fundamental questions about ethics, privacy, and the implications of leveraging such contentious technology once again.

One of the significant challenges in the digital landscape is the prevalence of scams that exploit the images of public figures. Scammers often employ these images—termed “celeb-bait”—to mislead individuals into clicking on fraudulent ads that could lead to spammy websites or worse. Meta’s new pilot program aims to combat this practice by utilizing facial recognition technology to match ad images with verified profile pictures of celebrities across Facebook and Instagram.

The methodology is straightforward: when a potentially fraudulent ad featuring a public figure surfaces, the ad’s imagery is compared to the celebrity’s existing profile images. If a match is confirmed and the ad is deemed a scam, Meta will remove it promptly. The company has asserted that any facial data generated in the process will be used only for this one-time comparison and purged immediately afterward, which it presents as evidence of its commitment to privacy.
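To make that flow concrete, here is a minimal sketch of the compare, decide, and purge steps. It is an illustration under stated assumptions, not Meta’s implementation: the numeric "embedding" representation of a face, the cosine-similarity comparison, the `MATCH_THRESHOLD` value, and the names `Ad` and `review_ad` are all hypothetical.

```python
from dataclasses import dataclass
from math import sqrt

MATCH_THRESHOLD = 0.8  # hypothetical cutoff, not a figure published by Meta


def cosine_similarity(a, b):
    """Similarity of two vectors: 1.0 for identical direction, 0.0 for unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class Ad:
    ad_id: str
    face_embedding: list   # hypothetical numeric signature of the face in the ad
    flagged_as_scam: bool  # assumed to come from a separate scam review


def review_ad(ad, celebrity_embeddings):
    """Return "remove" or "keep", then discard the ad's facial data."""
    try:
        matches = any(
            cosine_similarity(ad.face_embedding, ref) >= MATCH_THRESHOLD
            for ref in celebrity_embeddings
        )
        # An ad is removed only when it both matches and is judged a scam.
        return "remove" if matches and ad.flagged_as_scam else "keep"
    finally:
        # One-time use: the facial data is deleted whether or not it matched.
        ad.face_embedding = None
```

The point of the sketch is the ordering: a face match alone does not remove an ad, and the facial data is discarded on every path, which is the property users are being asked to take on trust.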

These intentions may seem sound, but they follow a history of stumbling over privacy issues. Meta has previously faced considerable backlash over its use of facial recognition technology, which led to the complete shutdown of the feature on Facebook in 2021. Revisiting facial recognition raises an uncomfortable question: can Meta regain user trust and demonstrate a commitment to the ethical use of biometrics?

Meta’s approach comes against a backdrop of concerning use cases involving facial recognition technology worldwide. For example, in some countries, the technology has been controversially employed for purposes such as monitoring citizens in public spaces, punishing individuals for civil infractions like jaywalking, or worse, targeting ethnic groups for surveillance. These scenarios inevitably fuel skepticism regarding how such tools can be deployed safely and ethically.

The specter of misuse looms large, inviting a discussion about regulation and accountability. Western regulators are increasingly aware of the hazards that facial recognition can pose, which has influenced Meta’s previous decisions to retreat from this domain. With this renewed trial, concerns arise that history might repeat itself, intensifying scrutiny of how the technology is used today and how its use could expand.

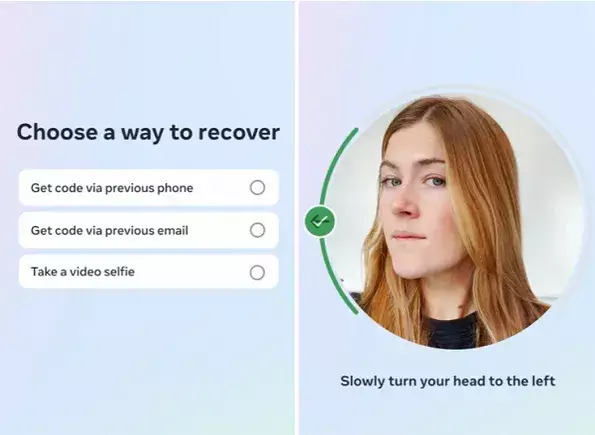

Beyond tackling scams, Meta is also exploring the use of video selfies for verifying user identities, particularly for account recovery in cases of unauthorized access. Users would upload video selfies that Meta’s algorithms would analyze to authenticate identity based on existing profile images.

While this method may enhance security and resembles the face-based unlocking already familiar from popular mobile devices and other applications, it raises important ethical and procedural issues. Users may be inclined to trust an organization with their biometric data only if clear protocols for data handling and retention are established. Meta explicitly states that all uploaded videos will be securely encrypted and never visible to anyone outside the verification process. Still, the question lingers: can users genuinely believe that these measures will be strictly upheld?

Moreover, the integration of facial recognition for identity verification reflects a larger trend in tech companies utilizing similar methods as standard security practices. While one could argue that this offers a layer of security, it is equally essential to explore what happens should these systems fail or be compromised, and whether relying on biometric verification could exacerbate the issues surrounding data vulnerability.

These trials force a conversation about the tension between security and privacy. Enhanced security measures are indeed warranted in the face of rising fraud and cyber threats, yet they should not come at the expense of user privacy or consent. Whether this use of facial recognition can be justified hinges on the transparency of Meta’s processes and the degree to which users can trust the company to protect their sensitive data.

While some may scoff at the notion of user trust being pertinent given Meta’s history, it remains vital for the company to foster an environment where users feel comfortable and secure. The challenge lies not merely in developing technology that can identify threats or protect users but in articulating a clear framework of accountability, ethical considerations, and an earnest dedication to user privacy.

While Meta’s use of facial recognition technology to combat scams and support account recovery promises to enhance user security, it also introduces substantial ethical considerations and privacy concerns. As the company returns to this territory, it must balance its ambitions with a commitment to ensuring that user trust is not only preserved but strengthened. The road ahead will undoubtedly be challenging, and Meta must tread it carefully to avoid repeating past mistakes.
