The rise of large language models (LLMs) such as GPT-4 marks a significant milestone in artificial intelligence. These models have reshaped domains ranging from creative writing to customer service automation. As they evolve, researchers continue to investigate how they process language. One recent study highlights an intriguing phenomenon known as the “Arrow of Time” effect: LLMs predict the next word in a sequence slightly better than they predict the previous one. This article looks at what the researchers found and what the bias may imply for AI development and for our understanding of language itself.

The Forward Prediction Advantage

At their core, LLMs work by predicting the next word in a sequence from the words that came before it. This forward prediction is not just an intuitive framing; it is the objective that underlies their capabilities. A natural question follows: how do these models fare when asked to predict the preceding word from the words that come after it? That question motivated a collaboration led by Professor Clément Hongler of EPFL and Jérémie Wenger of Goldsmiths (London), which revealed a surprising asymmetry in LLM performance.

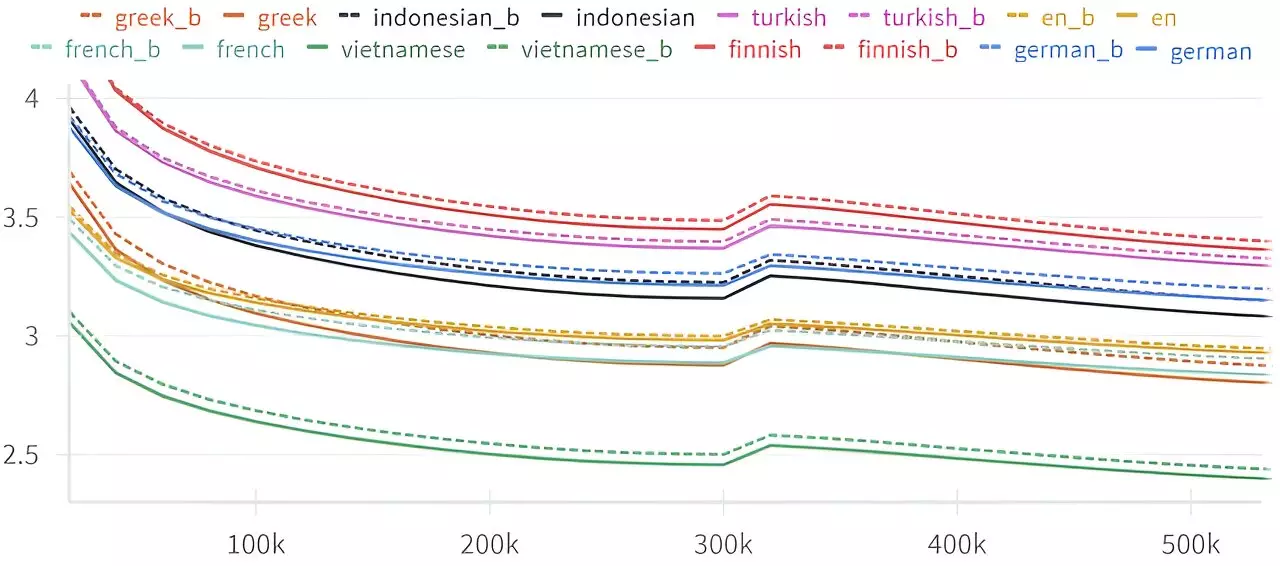

In essence, the team found that when LLMs attempt to “rewind” and predict backward, their accuracy drops. This “Arrow of Time” bias was observed consistently across model types, including Generative Pre-trained Transformers (GPT), Gated Recurrent Units (GRU), and Long Short-Term Memory (LSTM) networks. Each model showed a small but consistent reduction in performance when predicting backward rather than forward, which suggests the effect is not a quirk of one architecture but a more fundamental limitation in how these systems process language.
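
To make the forward and backward tasks concrete, here is a minimal sketch of how such a comparison can be set up. It is not the researchers' method: it swaps the neural models for a toy count-based n-gram model, and the names `mean_cross_entropy` and `compare_directions` are hypothetical. The only idea it illustrates is that the backward task is the forward task run on reversed sequences, with both directions scored by the same average loss. A model this simple should not be expected to reproduce the asymmetry reported in the study.

```python
import math
from collections import Counter, defaultdict


def mean_cross_entropy(train, test, order=2):
    """Average bits per token of an add-one-smoothed n-gram model.

    Each token is predicted from the `order` tokens immediately before it.
    `test` must contain more than `order` tokens.
    """
    vocab = set(train) | set(test)

    # Count how often each token follows each context in the training data.
    context_counts = defaultdict(Counter)
    for i in range(order, len(train)):
        context = tuple(train[i - order:i])
        context_counts[context][train[i]] += 1

    total_bits = 0.0
    n_predictions = 0
    for i in range(order, len(test)):
        context = tuple(test[i - order:i])
        counts = context_counts[context]
        # Add-one smoothing keeps unseen continuations from getting probability 0.
        prob = (counts[test[i]] + 1) / (sum(counts.values()) + len(vocab))
        total_bits -= math.log2(prob)
        n_predictions += 1
    return total_bits / n_predictions


def compare_directions(tokens, order=2, train_fraction=0.8):
    """Return (forward_loss, backward_loss) for one token sequence."""
    split = int(len(tokens) * train_fraction)
    train, test = tokens[:split], tokens[split:]
    forward = mean_cross_entropy(train, test, order)
    # Backward task: reverse each split so that "next token" in the reversed
    # sequence corresponds to "previous token" in the original one.
    backward = mean_cross_entropy(train[::-1], test[::-1], order)
    return forward, backward
```

Calling `compare_directions(text)` on a string treats each character as a token; passing a list of words works the same way. A lower value means better prediction in that direction.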

The findings point to something deeper about language processing: LLMs predict upcoming words well, but they are measurably worse at predicting the words that came before. The result echoes earlier work by Claude Shannon, a pioneer of information theory, who observed that people find it harder to predict the previous letters in a sequence than the next ones, even though in theory the two tasks are equally difficult. That LLMs show a similar trend hints at an intrinsic property of language itself, suggesting that its structure may be better aligned with a forward temporal flow.
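
The theoretical parity mentioned above follows from the chain rule of probability. As a short sketch, with $w_1, \dots, w_n$ as illustrative notation for the words of a sequence, the same joint probability can be factored in either direction:

$$
P(w_1, \dots, w_n) \;=\; \prod_{t=1}^{n} P(w_t \mid w_1, \dots, w_{t-1}) \;=\; \prod_{t=1}^{n} P(w_t \mid w_{t+1}, \dots, w_n)
$$

Taking the negative logarithm of both factorizations gives the same total, so a perfect predictor would incur the same overall loss in both directions. Any gap measured in practice therefore reflects how easily each factorization can be learned, not a difference in the information the text contains.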

The implications extend beyond AI capabilities; they touch on ideas of intelligence and causality within language. If we accept that LLMs process language in a direction-sensitive way, it opens the door to reconsidering how we define and recognize intelligence. Can the ability to process language effectively in both temporal directions inform our understanding of consciousness? This time-oriented processing may also matter for future AI development, potentially guiding the design of models that reduce the backward prediction disadvantage.

The link between LLMs and the passage of time presents new research avenues in AI and may also contribute to our understanding of physical concepts. By probing this relationship, researchers could gain fresh perspectives on the emergence of time in physics. The notion of causality, for example, is intertwined with both language and time; understanding how language conveys sequences of events is central to how we interpret reality.

The seed of this research was planted in a theatrical collaboration aimed at enhancing improvisational storytelling with chatbot technology. By training LLMs to construct narratives backward, the researchers met a creative requirement and, in the process, stumbled upon a phenomenon with far wider implications. It is a reminder of the serendipitous nature of scientific discovery, where a practical application can give rise to broader theory.

Conclusion: Reflections on AI’s Evolving Landscape

As our understanding of large language models grows, it is vital to engage critically with findings like the “Arrow of Time” effect. By revealing asymmetries in how AI processes language, this research raises essential questions about the interplay between cognition, temporal dynamics, and the nature of language. The ongoing dialogue between AI research and the study of human cognition will help refine the models we build, and it may also compel us to rethink our frameworks for understanding intelligence and causality. The work is only beginning, and its implications could prove significant both for AI development and for our broader understanding of time and causality.
