Amid the ongoing debate over generative AI and its ethical implications, Meta partnered with Stanford’s Deliberative Democracy Lab to host a community forum. The forum gathered feedback from more than 1,500 participants in Brazil, Germany, Spain, and the United States on their perceptions of, and concerns about, responsible AI development.

The findings showed that in each country, a majority of participants believe AI has had a positive impact. Most participants also agreed that AI chatbots should be allowed to use past conversations to improve their responses, provided users are informed. Perhaps more surprisingly, many were comfortable with AI chatbots being human-like, again as long as this is made transparent to users.

The report also highlighted regional differences. While some views on the benefits and risks of AI were consistent across countries, participants in different regions diverged on which specific aspects they viewed positively or negatively, reflecting a range of perspectives on how AI should be developed.

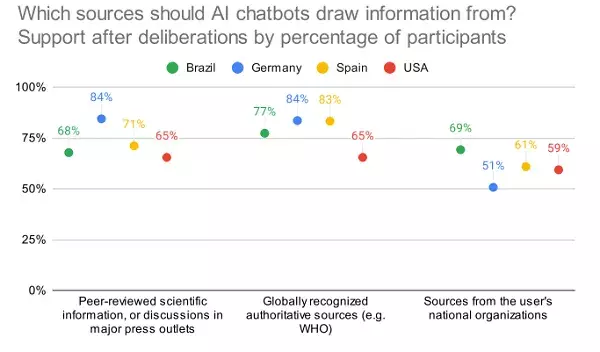

The study also examined attitudes toward AI disclosure and toward the sources from which AI tools draw their information. Participants in the United States were notably skeptical of some of these sources, which suggests a need for greater transparency and accountability in AI development.

Beyond public perceptions, the forum also addressed ethical questions in AI development, including whether users should be allowed to engage in romantic relationships with AI chatbots. The question may seem unusual, but it raises real issues about where the ethical boundaries of the technology should lie.

Recent controversies involving AI models, such as Google’s Gemini system and Meta’s Llama model, underscore the importance of ethical guidelines in AI development. These incidents show how much influence the companies building these models have over the outputs they produce, which raises questions about how far corporations should control AI tools and whether regulation is needed to ensure fairness and accuracy.

As AI capabilities continue to evolve, establishing universal guardrails to protect users from misinformation and biased outcomes becomes increasingly important. Many questions about AI development remain open, but regulatory frameworks appear essential to maintaining the integrity and fairness of AI tools. The ongoing debate highlights how complex AI ethics is, and how much careful deliberation will be needed to shape the future of AI development.
