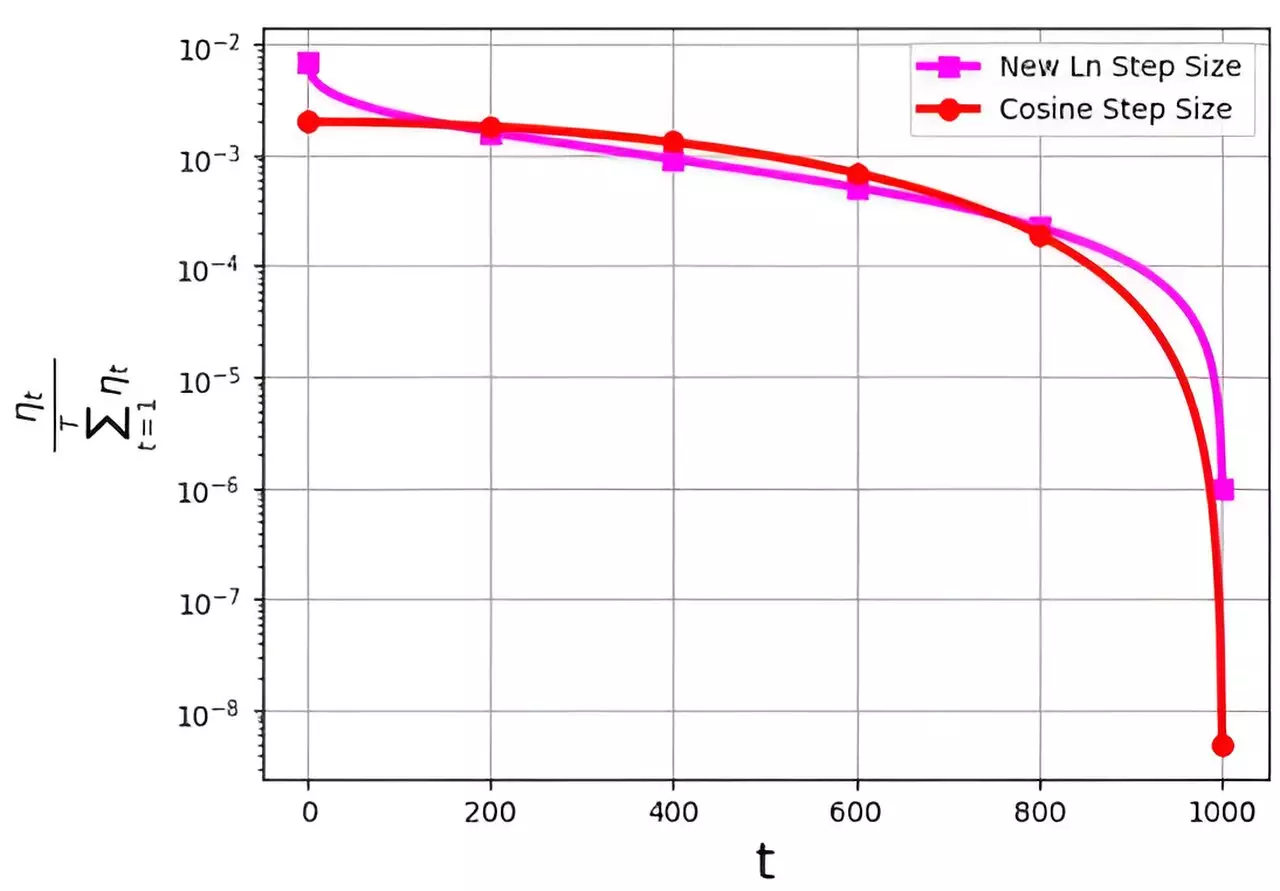

The step size, also known as the learning rate, is a critical factor in the efficiency of the stochastic gradient descent (SGD) algorithm. Recent work has produced a variety of step size strategies aimed at improving the performance of SGD. A key challenge with these strategies lies in the probability distribution they induce over iterations, $\eta_t / \sum_{t=1}^{T} \eta_t$, where $\eta_t$ is the step size at iteration $t$ and $T$ is the total number of iterations. This distribution often assigns excessively small values to the final iterations.
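As a worked illustration of how small these values can get, consider the widely used cosine step size. The calculation below assumes the standard cosine form $\eta_t = \frac{\eta_0}{2}\bigl(1 + \cos(\pi t / T)\bigr)$ for $t = 1, \dots, T$ with $T \ge 2$; the initial step size $\eta_0$ is a symbol introduced here for the example and does not come from the study's summary.

$$
\sum_{t=1}^{T} \eta_t
= \frac{\eta_0}{2}\Bigl(T + \sum_{t=1}^{T} \cos\frac{\pi t}{T}\Bigr)
= \frac{\eta_0}{2}\,(T - 1),
\qquad
\frac{\eta_t}{\sum_{s=1}^{T} \eta_s} = \frac{1 + \cos(\pi t / T)}{T - 1}.
$$

The cosine terms sum to $-1$ because the terms at $t$ and $T - t$ cancel, leaving only $\cos\pi = -1$. Under this assumed form, the last iteration receives weight exactly $0$, and the one before it receives $\frac{1 - \cos(\pi / T)}{T - 1} \approx \frac{\pi^2}{2T^3}$ for large $T$, far below the uniform weight $1/T$.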

Research Innovation

A study led by M. Soheil Shamaee, published in Frontiers of Computer Science, introduces a new approach to this challenge. The research team proposes a logarithmic step size for the SGD method that has proven especially effective in the final iterations. Unlike the traditional cosine step size, which assigns very low probability distribution values to the final iterations, the logarithmic step size performs well in this critical phase of the optimization process.
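The comparison can be sketched numerically. This summary does not state the formula for the logarithmic step size, so the form used below, `eta0 * (1 - ln(t) / ln(T))`, is an assumption made purely for illustration, as are the function names and the values of `eta0` and `T`. The sketch normalizes each schedule into the distribution described above and measures how much of the total weight falls on the last 10% of iterations.

```python
import math


def cosine_steps(eta0, T):
    """Standard cosine schedule: eta_t = eta0/2 * (1 + cos(pi * t / T))."""
    return [0.5 * eta0 * (1 + math.cos(math.pi * t / T)) for t in range(1, T + 1)]


def log_steps(eta0, T):
    """ASSUMED logarithmic schedule (not given in the article):
    eta_t = eta0 * (1 - ln(t) / ln(T)). Requires T >= 2."""
    return [eta0 * (1 - math.log(t) / math.log(T)) for t in range(1, T + 1)]


def normalize(steps):
    """Turn step sizes into the distribution eta_t / sum_t eta_t."""
    total = sum(steps)
    return [s / total for s in steps]


def tail_mass(probs, fraction=0.1):
    """Total probability assigned to the last `fraction` of iterations."""
    k = max(1, int(len(probs) * fraction))
    return sum(probs[-k:])


# Hypothetical settings chosen only for this example.
eta0, T = 0.1, 1000

cos_probs = normalize(cosine_steps(eta0, T))
log_probs = normalize(log_steps(eta0, T))

cos_tail = tail_mass(cos_probs)
log_tail = tail_mass(log_probs)

print(f"cosine: weight on last 10% of iterations = {cos_tail:.5f}")
print(f"log:    weight on last 10% of iterations = {log_tail:.5f}")
```

Under these assumptions, the logarithmic schedule places more of its weight on the closing iterations than the cosine schedule does, which matches the behaviour the study describes. The exact numbers depend on the assumed formula and on `T`.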

The experimental findings presented in the study highlight the superior performance of the new logarithmic step size on the FashionMNIST, CIFAR10, and CIFAR100 datasets. In particular, when combined with a convolutional neural network (CNN) model, the logarithmic step size yielded a 0.9% increase in test accuracy on CIFAR100. These results indicate that the logarithmic step size can improve both the efficiency and the accuracy of the SGD algorithm.

The logarithmic step size opens up new possibilities for improving the convergence and performance of gradient descent optimization techniques. Future research could refine the approach further to achieve greater gains in efficiency and accuracy across a wider range of datasets and neural network models. The findings of this study pave the way for continued innovation in optimization algorithms and machine learning.
