Cognitive flexibility, the ability to quickly switch between different thoughts and mental concepts, is a valuable human capability. This ability enables multitasking, rapid skill acquisition, and adaptation to new situations. While artificial intelligence (AI) systems have advanced in recent decades, they still lack the flexibility seen in humans. Researchers are now exploring how neural circuits in the brain support cognitive flexibility, particularly in multitasking, in order to improve the flexibility of AI systems.

In 2019, a research group from New York University, Columbia University, and Stanford University trained a single neural network to perform 20 related tasks. A later study, published in Nature Neuroscience, set out to understand how this network handles multiple tasks through modular computations. Its authors, Laura N. Driscoll, Krishna Shenoy, and David Sussillo, emphasized the importance of flexible computation in intelligent behavior and highlighted the need to study how neural networks adapt to different computations.

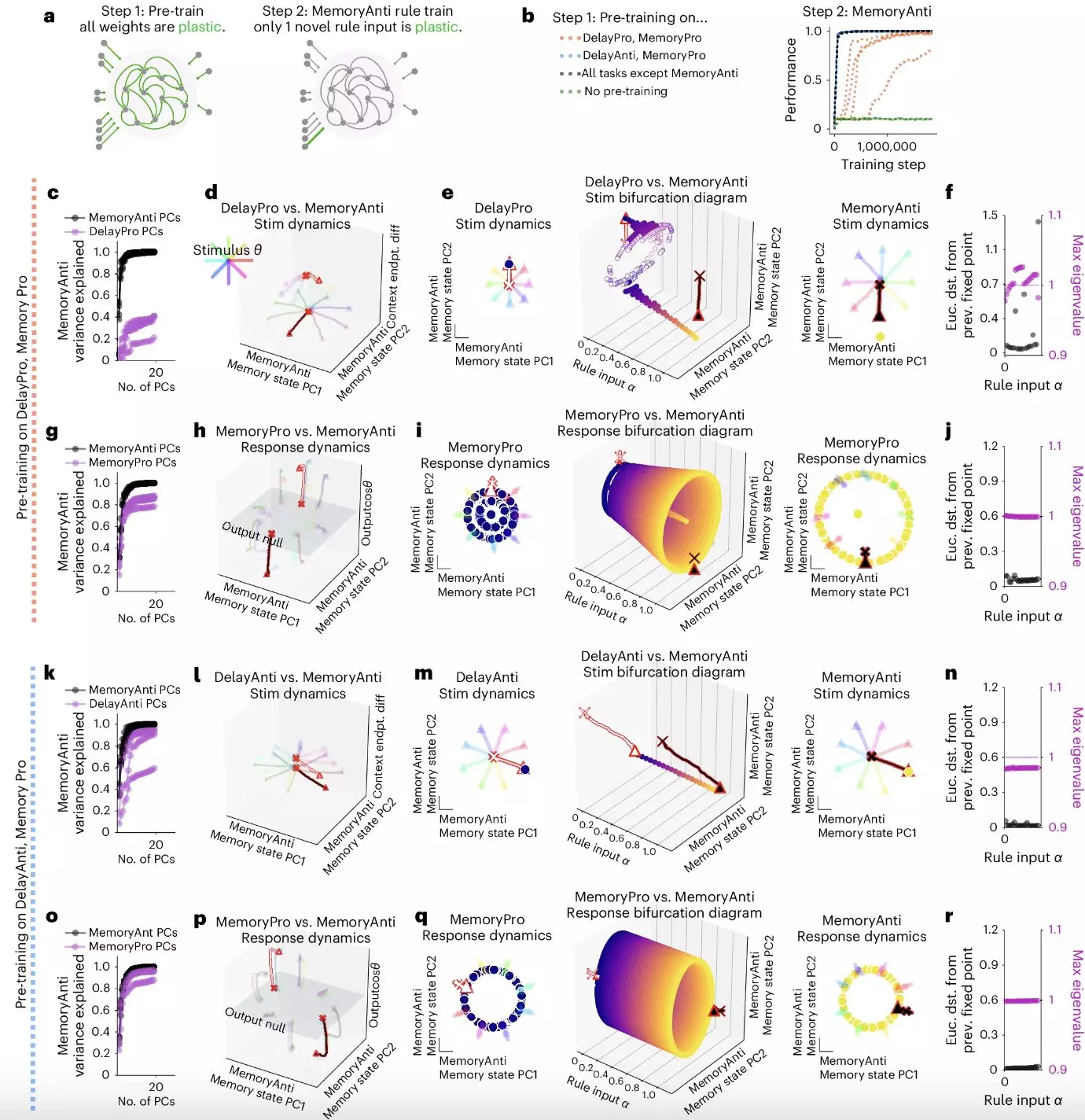

The researchers examined recurrently connected artificial neural networks to uncover a computational substrate that enables modular computations. They introduced the concept of “dynamical motifs” to describe recurring patterns of neural activity that implement specific computations through dynamics, such as attractors, decision boundaries, and rotations. Analysis of these recurrent networks showed that dynamical motifs are implemented by clusters of units when the unit activation function is restricted to be positive. Lesioning these clusters impaired the networks’ ability to perform modular computations, underscoring the significance of the motifs.
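To make the idea of lesioning a cluster of units concrete, here is a minimal sketch in plain Python. It is not the authors' code or model: the network is tiny, its weights are random and untrained, and the names `make_network`, `run`, and `cluster` are hypothetical. It assumes a rectified activation, so unit activity is never negative, and it models a lesion as clamping the chosen units to zero at every time step.

```python
import random


def relu(x):
    """Rectified activation: unit activity is never negative."""
    return x if x > 0.0 else 0.0


def make_network(n_units, n_inputs, seed=0):
    """Build random, untrained weights (illustration only)."""
    rng = random.Random(seed)
    scale = 1.0 / n_units ** 0.5
    w_rec = [[rng.gauss(0.0, scale) for _ in range(n_units)]
             for _ in range(n_units)]
    w_in = [[rng.gauss(0.0, 1.0) for _ in range(n_inputs)]
            for _ in range(n_units)]
    return w_rec, w_in


def run(w_rec, w_in, inputs, lesioned=frozenset()):
    """Step the recurrent network through `inputs`; return the final state.

    Units whose index is in `lesioned` are clamped to zero at every step,
    so they neither respond to input nor drive other units.
    """
    n_units = len(w_rec)
    h = [0.0] * n_units
    for x in inputs:
        new_h = []
        for i in range(n_units):
            if i in lesioned:
                new_h.append(0.0)
                continue
            drive = sum(w_rec[i][j] * h[j] for j in range(n_units))
            drive += sum(w_in[i][k] * x[k] for k in range(len(x)))
            new_h.append(relu(drive))
        h = new_h
    return h


w_rec, w_in = make_network(n_units=8, n_inputs=2)
inputs = [[1.0, 0.0]] * 5          # the same input repeated for 5 steps
cluster = {0, 1, 2}                # hypothetical cluster of units to lesion

intact = run(w_rec, w_in, inputs)
lesioned = run(w_rec, w_in, inputs, lesioned=cluster)
```

In a trained multitask network, one would compare task performance with and without the lesion. This sketch only shows the mechanics of silencing a cluster, not the performance deficits reported in the study.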

The study also found that, after an initial learning phase, motifs were reconfigured for fast transfer learning. The work establishes dynamical motifs as a fundamental unit of compositional computation, intermediate between individual neurons and network behavior. As whole-brain studies record activity from multiple specialized systems at once, the dynamical motif framework could guide questions about specialization and generalization.

The research by Driscoll, Shenoy, and Sussillo offers insights into the computational substrates that allow recurrent neural networks to tackle multiple tasks effectively. The findings have implications for both neuroscience and computer science, and could lead to a better understanding of the neural processes underlying cognitive flexibility. By applying these insights to artificial neural networks, researchers may develop new strategies to enhance the flexibility and adaptability of AI systems.