Deep learning has advanced rapidly over the past few years, reaching notable milestones in image classification and natural language processing. This progress has prompted researchers to explore new hardware that can meet the computational demands of deep neural networks. One line of work focuses on hardware accelerators: specialized computing devices designed to run specific computational tasks more efficiently than general-purpose CPUs. Hardware accelerators have largely been developed independently of the machine learning models they run. A recent study by researchers at the University of Manchester and Pragmatic Semiconductor takes a different approach, introducing what the team calls "tiny classifiers."

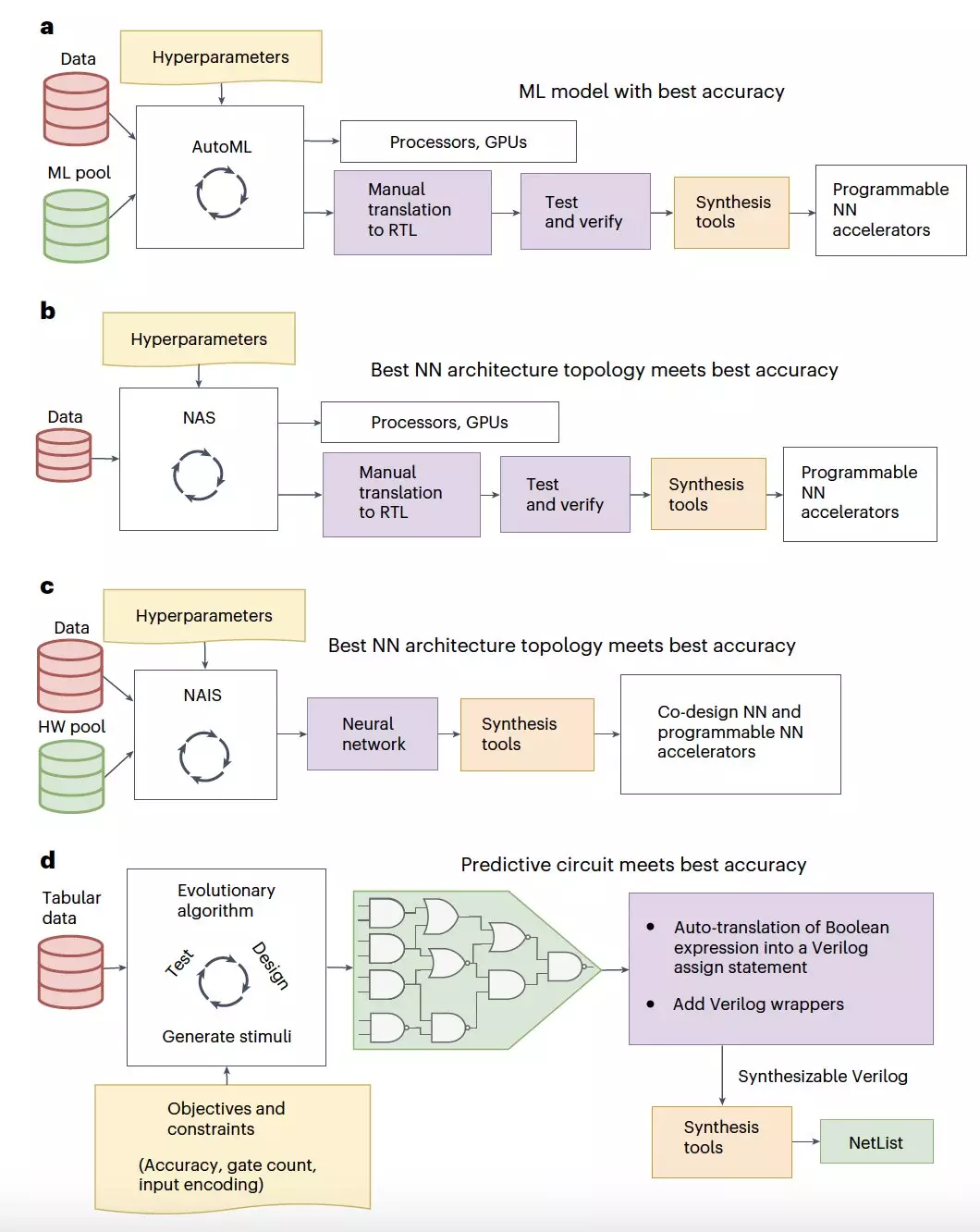

The team, led by Konstantinos Iordanou and Timothy Atkinson, has proposed a machine learning-based approach that generates classification circuits directly from tabular data, a structured data format whose columns can mix numerical and categorical values. In their paper, published in Nature Electronics, the researchers point out the limitations of scaling machine learning models and the need to reduce memory and area footprint for deployment on different hardware platforms. The team's tiny classifiers consist of only a few hundred logic gates, yet they deliver accuracy comparable to state-of-the-art machine learning classifiers while using far fewer hardware resources and much less power.

Iordanou, Atkinson, and their colleagues used an evolutionary algorithm to search the space of logic-gate circuits and automatically generate classifier circuits that maximize prediction accuracy on the training data, with circuit size capped at 300 logic gates. They evaluated the tiny classifiers both in simulation and on a low-cost integrated circuit. In simulation, the tiny classifiers used 8–18 times less area and 4–8 times less power than the best-performing machine learning baseline. Implemented on a low-cost chip on a flexible substrate, they did even better: 10–75 times less area, 13–75 times less power, and 6 times better yield than existing hardware-efficient ML baselines.
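The article does not describe the search procedure in detail, so the following is only a rough illustration of the general idea: a minimal (1+λ) evolutionary search over feed-forward netlists of two-input logic gates, in the style of Cartesian genetic programming, with training accuracy as the fitness and the 300-gate cap from the article. This is not the authors' method. The gate set, mutation rate, population sizes, and every function name (`random_gate`, `evaluate`, `accuracy`, `mutate`, `evolve`) are hypothetical, and the inputs are assumed to be tabular features that have already been encoded as bits.

```python
import random
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical gate library; the paper's actual gate set is not given in the article.
OPS: Dict[str, Callable[[int, int], int]] = {
    "AND": lambda a, b: a & b,
    "OR": lambda a, b: a | b,
    "XOR": lambda a, b: a ^ b,
    "NAND": lambda a, b: 1 - (a & b),
    "NOR": lambda a, b: 1 - (a | b),
}
MAX_GATES = 300  # circuit size cap mentioned in the article

Gate = Tuple[str, int, int]  # (operation, index of input a, index of input b)


def random_gate(position: int, n_inputs: int, rng: random.Random) -> Gate:
    # A gate may read any primary input or any earlier gate, so the circuit has no loops.
    limit = n_inputs + position
    return (rng.choice(list(OPS)), rng.randrange(limit), rng.randrange(limit))


def evaluate(circuit: List[Gate], bits: Sequence[int]) -> int:
    # Signals 0..n_inputs-1 are the input bits; each gate appends one new signal.
    signals = list(bits)
    for op, a, b in circuit:
        signals.append(OPS[op](signals[a], signals[b]))
    return signals[-1]  # assumption: the last gate drives the predicted class bit


def accuracy(circuit: List[Gate], rows: Sequence[Sequence[int]], labels: Sequence[int]) -> float:
    hits = sum(evaluate(circuit, row) == y for row, y in zip(rows, labels))
    return hits / len(rows)


def mutate(circuit: List[Gate], n_inputs: int, rng: random.Random, rate: float = 0.05) -> List[Gate]:
    # Each gate is independently replaced by a fresh random gate with probability `rate`.
    child = list(circuit)
    for i in range(len(child)):
        if rng.random() < rate:
            child[i] = random_gate(i, n_inputs, rng)
    return child


def evolve(rows, labels, n_gates=20, offspring=4, generations=300, seed=0):
    if not 1 <= n_gates <= MAX_GATES:
        raise ValueError(f"n_gates must be between 1 and {MAX_GATES}")
    rng = random.Random(seed)
    n_inputs = len(rows[0])
    parent = [random_gate(i, n_inputs, rng) for i in range(n_gates)]
    best = accuracy(parent, rows, labels)
    history = [best]  # best training accuracy after each generation
    for _ in range(generations):
        children = [mutate(parent, n_inputs, rng) for _ in range(offspring)]
        score, child = max(((accuracy(c, rows, labels), c) for c in children), key=lambda sc: sc[0])
        if score >= best:  # accept ties so the search can drift through neutral changes
            parent, best = child, score
        history.append(best)
    return parent, best, history


if __name__ == "__main__":
    # Toy "tabular" dataset: three binary features, label = (x0 AND x1) OR x2.
    rows = [(a, b, c) for a in (0, 1) for b in (0, 1) for c in (0, 1)]
    labels = [(a & b) | c for a, b, c in rows]
    circuit, acc, _ = evolve(rows, labels, seed=1)
    print(f"training accuracy: {acc:.3f} with {len(circuit)} gates")
```

Accepting children that tie with the parent is a common choice in this style of search because it lets the population move across plateaus of equal fitness. A practical flow would also check accuracy on held-out data rather than relying on training accuracy alone.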

Tiny classifiers could find real-world uses across many industries. Because they are so small and power-efficient, they could serve as triggering mechanisms in chip-based systems for smart packaging and monitoring of goods, or as the basis of low-cost near-sensor computing systems. More broadly, the approach could help shape the next generation of hardware accelerators for machine learning.

Overall, the work by Iordanou, Atkinson, and their team represents a significant advance for hardware accelerators in machine learning. Tiny classifiers show that high accuracy is achievable with minimal hardware resources, opening up new opportunities for efficient, low-cost deployment of machine learning models. As the research progresses, it will be interesting to see how tiny classifiers develop and whether they speed up the arrival of smart and sustainable technologies.
