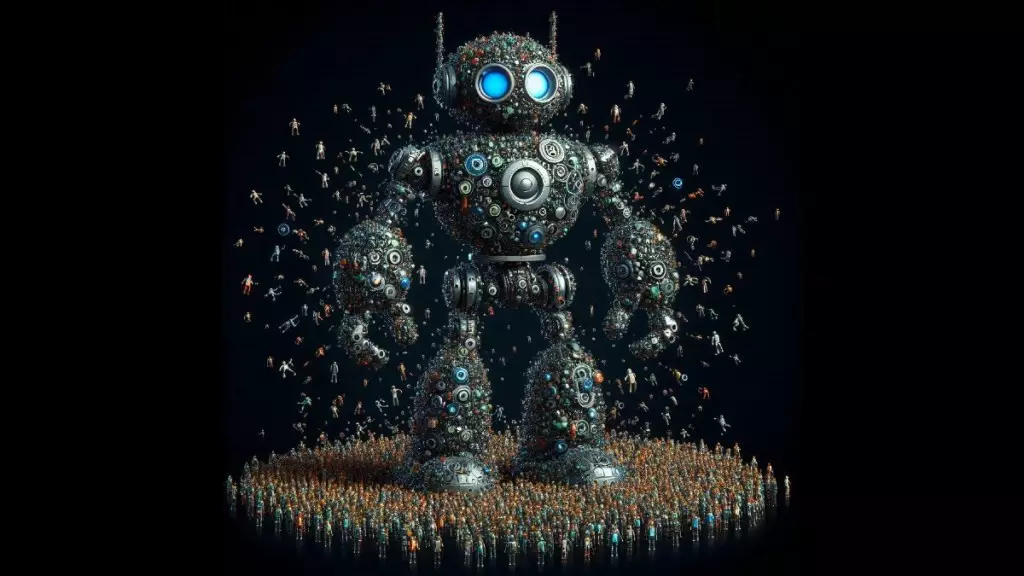

Mixture-of-Experts (MoE) has been widely adopted as a technique for scaling large language models (LLMs) while keeping computational costs in check. By routing each input to a small set of specialized “expert” modules, MoE lets an LLM increase its parameter count without a matching increase in inference cost. Current MoE techniques are effective, but they are limited in the number of experts they can accommodate. In a new study, Google DeepMind introduces Parameter Efficient Expert Retrieval (PEER), an architecture that can scale MoE models to millions of experts, improving the performance-compute tradeoff for large language models.

The Problem with Scaling Language Models

Increasing the parameter count of language models has been shown to improve performance and capabilities. However, a model can only be scaled so far before it runs into computational and memory bottlenecks. Each transformer block in an LLM contains attention layers and feedforward (FFW) layers. The FFW layers, which store the model’s knowledge, hold a large portion of the model’s parameters and are a bottleneck for scaling transformers. In a traditional transformer, every FFW parameter is used during inference, so the computational footprint grows in direct proportion to the layer's size. MoE addresses this by replacing the FFW with sparsely activated expert modules that specialize in specific areas. Because only a few experts run for any given input, the computational cost is far lower than that of a dense layer with the same number of parameters.
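To make the dense-versus-sparse distinction concrete, here is a back-of-the-envelope sketch in Python. It assumes a two-matrix FFW block and ignores biases and router weights; the function names and the sizes used (model width 1024, hidden width 4096, 8 experts, 2 active per input) are illustrative choices, not figures from the study.

```python
def ffw_params(d_model: int, d_hidden: int) -> int:
    """Weights in a two-matrix FFW block (up- and down-projection, no biases)."""
    return 2 * d_model * d_hidden


def moe_params(d_model: int, d_hidden: int, num_experts: int, top_k: int):
    """Return (stored, active) weight counts for an MoE layer.

    Assumption: each expert is a full FFW block and exactly top_k experts
    run for each input. Router weights are ignored.
    """
    per_expert = ffw_params(d_model, d_hidden)
    return num_experts * per_expert, top_k * per_expert


dense = ffw_params(1024, 4096)
stored, active = moe_params(1024, 4096, num_experts=8, top_k=2)
print(dense)   # 8388608 weights, all used for every input
print(stored)  # 67108864 weights held in memory
print(active)  # 16777216 weights actually used per input
```

In this toy setting the MoE layer stores eight times the weights of the dense layer but touches only a quarter of them for any single input, which is the decoupling of capacity from per-input compute that the paragraph above describes.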

An MoE layer uses a router to assign each input to the expert modules best suited to process it. By increasing the number of experts, MoE can raise the capacity of an LLM without significantly increasing computational cost. Studies have shown that the optimal number of experts in an MoE model depends on factors such as the number of training tokens and the compute budget, with MoEs consistently outperforming dense models under similar resource constraints. Increasing the “granularity” of an MoE model, that is, the number of experts, leads to performance gains, particularly when coupled with larger models and more training data. High-granularity MoE can also help models learn new knowledge more efficiently and adapt to continuous data streams.
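The routing step can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the router of any particular model: it scores every expert with a linear router, keeps the top k, and mixes their outputs with softmax gates. The function names, the softmax gating, and the toy experts are all hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(x, router_weights, k):
    """Return [(expert_index, gate)] for the k highest-scoring experts."""
    scores = [dot(w, x) for w in router_weights]
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    gates = softmax([scores[i] for i in top])
    return list(zip(top, gates))


def moe_forward(x, router_weights, experts, k):
    """Gate-weighted sum of the selected experts; the others never run."""
    out = [0.0] * len(x)
    for index, gate in route_top_k(x, router_weights, k):
        y = experts[index](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


# Three toy experts that simply scale their input.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 10.0, 100.0)]
router_weights = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0]]
x = [1.0, 0.0]
print([index for index, _ in route_top_k(x, router_weights, k=2)])  # [2, 0]
print(moe_forward(x, router_weights, experts, k=2))
```

Note that this router scores every expert for every input, so its cost grows with the number of experts. That is workable for a handful of experts but becomes a bottleneck as the expert count rises.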

The Innovation of Parameter Efficient Expert Retrieval

Traditional MoE approaches are hard to scale: their routers are fixed, and they often need to be readjusted when new experts are added. PEER overcomes these limitations by replacing the fixed router with a learned index that efficiently routes input data to a vast pool of experts. PEER uses tiny experts with a single neuron in the hidden layer, which lets the model share hidden neurons among experts, improving knowledge transfer and parameter efficiency. To make up for the small size of each expert, PEER uses a multi-head retrieval approach, which allows it to handle a very large number of experts without compromising speed. A PEER layer can be added to an existing transformer model or used to replace an FFW layer, and the approach is related to parameter-efficient fine-tuning techniques.
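The following Python sketch shows one way the pieces described above could fit together. The article describes the learned index only at a high level, so several things here are assumptions: the index is modeled as a product-key lookup (the query is split in half and each half is scored against its own small set of sub-keys), the single hidden neuron uses ReLU, and gates come from a softmax over the retrieved scores. All names are hypothetical and none of this is the authors' code.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def top_k(scores, k):
    """Indices of the k largest scores, best first."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def product_key_top_k(query, keys_a, keys_b, k):
    """Find the top-k of n*n experts while scoring only 2*n sub-keys.

    Expert (i, j) scores keys_a[i] . q_a + keys_b[j] . q_b and has flat
    index i * n + j. The true top-k always lies inside the k*k candidates
    formed from the top-k of each half.
    """
    half = len(query) // 2
    q_a, q_b = query[:half], query[half:]
    scores_a = [dot(key, q_a) for key in keys_a]
    scores_b = [dot(key, q_b) for key in keys_b]
    n = len(keys_b)
    candidates = [(scores_a[i] + scores_b[j], i * n + j)
                  for i in top_k(scores_a, k) for j in top_k(scores_b, k)]
    candidates.sort(reverse=True)
    return [(index, score) for score, index in candidates[:k]]


def peer_forward(x, heads, down, up, k):
    """Sum, over retrieval heads, of gated single-neuron experts.

    heads: one (query_matrix, keys_a, keys_b) triple per head
    down[e], up[e]: input and output weight vectors of expert e
    """
    out = [0.0] * len(up[0])
    for query_matrix, keys_a, keys_b in heads:
        query = [dot(row, x) for row in query_matrix]
        hits = product_key_top_k(query, keys_a, keys_b, k)
        gates = softmax([score for _, score in hits])
        for (e, _), gate in zip(hits, gates):
            hidden = max(0.0, dot(down[e], x))  # the expert's one hidden neuron
            out = [o + gate * hidden * u for o, u in zip(out, up[e])]
    return out


# Tiny demo with random weights: 16 * 16 = 256 experts, 2 heads, 4 experts per head.
random.seed(0)
d_model, d_query, n, k = 8, 4, 16, 4
rand_vec = lambda size: [random.gauss(0.0, 1.0) for _ in range(size)]
heads = [([rand_vec(d_model) for _ in range(d_query)],
          [rand_vec(d_query // 2) for _ in range(n)],
          [rand_vec(d_query // 2) for _ in range(n)]) for _ in range(2)]
down = [rand_vec(d_model) for _ in range(n * n)]
up = [rand_vec(d_model) for _ in range(n * n)]
y = peer_forward(rand_vec(d_model), heads, down, up, k)
print(len(y))  # 8: same width as the input
```

Under these assumptions, each head evaluates 2 * 16 sub-key scores instead of 256 full expert scores, and the gap widens quickly: a pool of one million experts would need only 2 * 1000 sub-key scores per head. Because every retrieved expert contributes a single hidden neuron, the heads together assemble a small, input-specific hidden layer out of the shared pool.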

PEER has significant implications for the scalability and performance of large language models. By scaling MoE models to millions of experts, it achieves a better performance-compute tradeoff than traditional dense FFW layers. The researchers' experiments show that PEER models reach lower perplexity than their counterparts with the same computational budget, and that increasing the number of experts reduces perplexity further. These results challenge the belief that MoE efficiency peaks at a limited number of experts, and they point to new ways of reducing the cost and complexity of training and serving very large language models.
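For readers unfamiliar with the metric, perplexity is the exponential of the average negative log-likelihood a model assigns to each token, so lower is better. A minimal Python sketch follows; the function name and the toy probabilities are illustrative, not results from the study.

```python
import math


def perplexity(token_log_probs):
    """exp of the mean negative log-likelihood per token (natural log)."""
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# A model that gives every correct token probability 0.25 is, on average,
# as uncertain as a uniform choice among 4 options.
print(round(perplexity([math.log(0.25)] * 4), 6))  # 4.0
```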

Parameter Efficient Expert Retrieval (PEER) offers a way to scale MoE models to millions of experts while improving both performance and efficiency. If the approach holds up at larger scale, it could change how large language models are developed and deployed.
