The realm of artificial intelligence (AI) has seen an influx of innovations, with various startups attempting to carve a niche in a field dominated by heavyweight players. One such emerging player is DeepSeek, a Chinese startup that has made waves with its latest offering, the DeepSeek-V3 model. With its impressive parameter count and multi-faceted architecture, DeepSeek-V3 stands as a testament to the growing capabilities of open-source AI technologies. This article delves into the distinguishing features of DeepSeek-V3, its implications for the AI industry, and what it means for the future of artificial general intelligence (AGI).

At the heart of DeepSeek-V3’s design is a mixture-of-experts (MoE) architecture with 671 billion total parameters. Unlike dense models, which activate every parameter for every token, DeepSeek-V3 routes each token to a small set of specialized experts, so only about 37 billion parameters are active per token. This selective activation keeps the compute per token low and lets individual experts specialize, which is particularly valuable in applications that demand nuanced reasoning and comprehension.
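The selective activation described above can be illustrated with a toy top-k router. This is a minimal sketch, not DeepSeek’s implementation: the function names, the softmax weighting over the selected experts, and the scalar "experts" are all assumptions chosen for readability.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    top = sorted(range(len(router_scores)),
                 key=lambda i: router_scores[i], reverse=True)[:k]
    # Weights are normalized over the selected experts only (an assumption).
    weights = softmax([router_scores[i] for i in top])
    return list(zip(top, weights))

def moe_forward(x, experts, router_scores, k=2):
    """Run only the selected experts and mix their outputs."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_scores, k))

# Hypothetical toy experts: each one just scales its input.
experts = [lambda x, a=a: a * x for a in (1.0, 2.0, 3.0, 4.0)]
router_scores = [0.1, 2.0, -1.0, 2.0]   # made-up scores for one token

selected = route_top_k(router_scores, k=2)
output = moe_forward(3.0, experts, router_scores, k=2)
print(selected, output)
```

Only two of the four toy experts run for this token; the other two contribute no compute, which is the property that lets a very large MoE model keep its per-token cost far below its total parameter count.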

The architecture, which evolves from that of its predecessor DeepSeek-V2, combines multi-head latent attention (MLA) with DeepSeekMoE. This combination lets the model train and run inference efficiently while processing very large volumes of data. What sets DeepSeek-V3 apart is a load-balancing strategy that dynamically adjusts how work is distributed among the ‘experts’ (essentially smaller networks within the larger model), so that no individual expert is overburdened.
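One way such dynamic balancing can work is to give each expert a bias that is added to its routing score only when experts are selected, and to nudge that bias down for overloaded experts and up for underloaded ones after each step. The sketch below follows that general idea under simplifying assumptions: the function names, the fixed step size, the mean-load threshold, and the toy affinities are illustrative and are not taken from DeepSeek’s code.

```python
def select_experts(affinity, bias, k):
    """Pick the top-k experts by affinity + bias (bias affects selection only)."""
    adjusted = [a + b for a, b in zip(affinity, bias)]
    return sorted(range(len(affinity)),
                  key=lambda i: adjusted[i], reverse=True)[:k]

def update_bias(bias, load, step):
    """Lower the bias of overloaded experts, raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, n in zip(bias, load):
        if n > mean_load:
            new_bias.append(b - step)
        elif n < mean_load:
            new_bias.append(b + step)
        else:
            new_bias.append(b)
    return new_bias

def simulate(token_affinities, num_experts, k=1, rounds=5, step=0.1):
    """Route the same batch repeatedly and record each round's expert load."""
    bias = [0.0] * num_experts
    history = []
    for _ in range(rounds):
        load = [0] * num_experts
        for affinity in token_affinities:
            for i in select_experts(affinity, bias, k):
                load[i] += 1
        history.append(load)
        bias = update_bias(bias, load, step)
    return bias, history

# Made-up batch: every token initially prefers expert 0.
batch = [[1.0, 0.9], [1.0, 0.7], [1.0, 0.5], [1.0, 0.3]]
final_bias, history = simulate(batch, num_experts=2)
print(history)
```

In this toy run the load starts fully skewed toward expert 0 and settles into an even split after a few rounds, without adding any extra term to the training loss.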

DeepSeek has introduced two notable features with the release of DeepSeek-V3: an auxiliary-loss-free load-balancing strategy and a multi-token prediction (MTP) objective. The former keeps work evenly spread across experts without the auxiliary loss term that such balancing usually relies on, while the latter trains the model to predict several future tokens at once rather than only the next one. Together with the rest of the design, this contributes to training efficiency and to a generation speed of up to 60 tokens per second, which DeepSeek says is three times faster than its predecessor, DeepSeek-V2.
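To make the idea of multi-token prediction concrete, the sketch below builds training targets in which each position is paired with the next few tokens instead of only the next one. It is a data-layout illustration only: the function name `mtp_targets` and the `depth` parameter are hypothetical, and the sketch says nothing about how DeepSeek-V3’s prediction modules are actually built.

```python
def mtp_targets(tokens, depth):
    """Pair each context with the next `depth` tokens as prediction targets.

    depth=1 reduces to ordinary next-token prediction.
    """
    examples = []
    for i in range(len(tokens) - depth):
        context = tokens[: i + 1]
        targets = tokens[i + 1 : i + 1 + depth]
        examples.append((context, targets))
    return examples

tokens = ["The", "model", "predicts", "several", "tokens"]
pairs = mtp_targets(tokens, depth=2)
for context, targets in pairs:
    print(context, "->", targets)
```

Each position now supplies two supervised targets instead of one, which is one intuition for why such an objective can extract more training signal from the same text.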

The model was pre-trained on 14.8 trillion tokens. Post-training refinements, including Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL), then aligned it with human preferences and improved its practical usefulness. DeepSeek presents this combination of training methods as a step toward AGI, a long-standing goal for many in the AI community.

DeepSeek-V3’s training process is notable for its efficient use of resources. Using hardware-aware optimizations such as FP8 mixed-precision training and the DualPipe algorithm for pipeline parallelism, DeepSeek completed training at a total cost of around $5.57 million. This figure is far lower than the sums typically associated with training large language models, such as the reported $500 million investment for Meta’s Llama-3.1.
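The cost figure is easy to sanity-check with simple arithmetic. The sketch below assumes the inputs given in DeepSeek’s technical report, which are not stated in this article: roughly 2.788 million H800 GPU hours, priced at an assumed rental rate of $2 per GPU hour. The Llama-3.1 figure is the reported estimate quoted above, not an official number.

```python
# Assumed inputs (from DeepSeek's technical report, not from this article).
GPU_HOURS = 2_788_000          # total H800 GPU hours for the full training run
PRICE_PER_GPU_HOUR = 2.00      # assumed rental price in USD

# Reported estimate quoted in the article for Meta's Llama-3.1.
LLAMA_REPORTED_COST = 500_000_000

deepseek_cost = GPU_HOURS * PRICE_PER_GPU_HOUR
ratio = LLAMA_REPORTED_COST / deepseek_cost

print(f"Estimated DeepSeek-V3 training cost: ${deepseek_cost:,.0f}")
print(f"Reported Llama-3.1 cost is about {ratio:.0f}x higher")
```

Under these assumptions the estimate comes to about $5.58 million, in line with the figure of around $5.57 million quoted above. It covers GPU time for the training run only, so it should not be read as the full cost of developing the model.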

This economical training strategy has not hindered DeepSeek-V3’s performance. Benchmarks reveal that it stands tall among its competitors, outperforming notable open-source models and rivaling even more established models from Anthropic and OpenAI. The model’s impressive accuracy, particularly in specialized languages and mathematical tasks, illustrates its robustness and versatility, establishing it as a formidable contender in the AI landscape.

The emergence of DeepSeek-V3 represents a critical milestone in the quest for open-source AI capabilities that can rival established closed-source models. As competition between closed and open-source models intensifies, models like DeepSeek-V3 have the potential to democratize AI technology, offering enterprises a wider range of options and fostering innovation through accessibility. DeepSeek’s decision to publish its code on GitHub under an MIT license showcases its commitment to community collaboration and further reinforces the importance of open-source technologies in shaping future advancements.

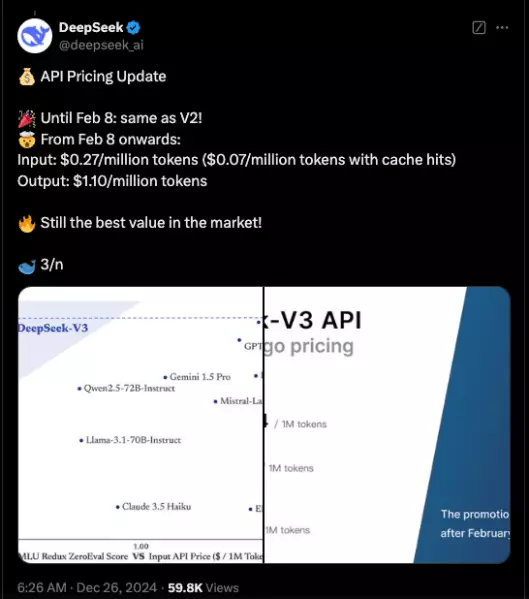

Moreover, with the introduction of platforms like DeepSeek Chat, which allows users to interact with the model, the barriers for enterprises seeking to implement advanced AI systems are further lowered. DeepSeek’s pricing model also exemplifies a forward-thinking approach, making high-performance AI accessible to more users, which could ultimately catalyze diverse applications across industries.

As DeepSeek-V3 continues to gain traction, its implications for the future of AI and the pursuit of AGI cannot be overstated. The blend of innovative architecture, cost-efficient training, and powerful performance positions this model at the forefront of the ongoing AI revolution. As more companies embrace open-source technologies, the landscape will likely evolve into a more competitive, collaborative, and inclusive arena, benefiting not only the tech industry but society at large. In this exciting era, DeepSeek represents a significant stride toward not just understanding AI but fostering its intelligent evolution.
