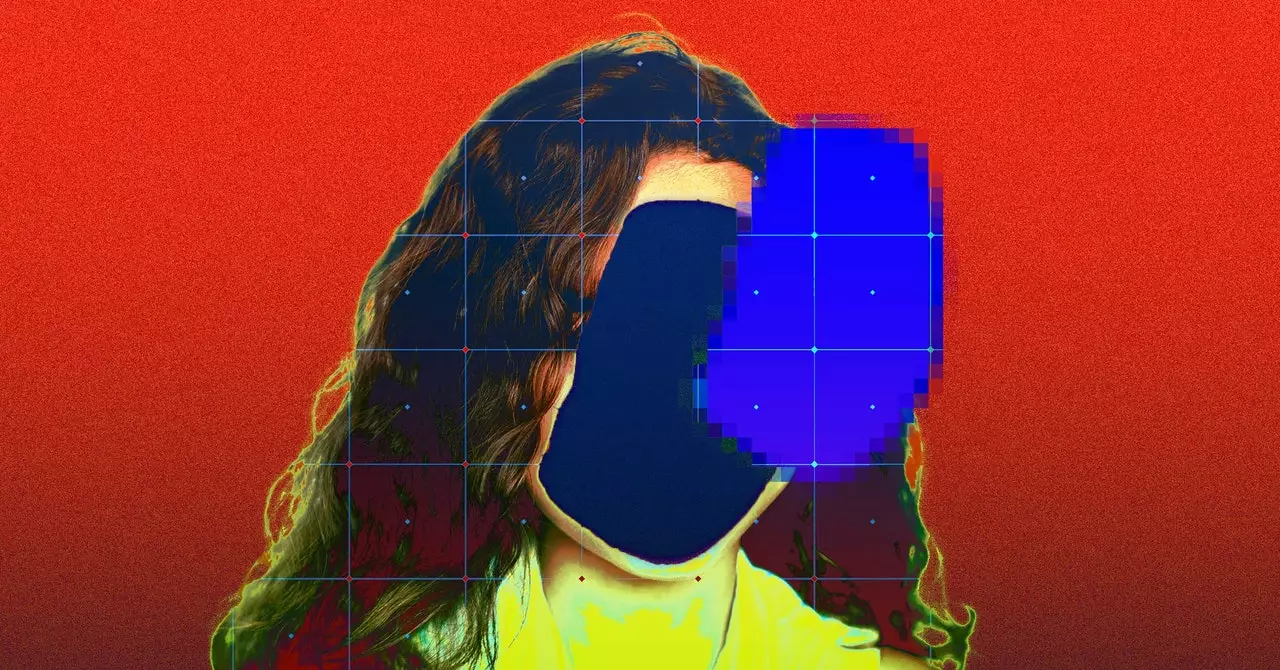

The unauthorized scraping of more than 170 images of children in Brazil, along with their personal details, for an open-source dataset has raised serious concerns about privacy rights and consent. The images, taken from content posted between 1995 and 2023, were used to train AI models without the knowledge or consent of the children or their parents. Human Rights Watch describes this as a violation of privacy rights: the children’s photos were included in the LAION-5B dataset, which has been widely used by AI startups for training purposes.

The implications of this unethical use of children’s images in AI training datasets are significant. According to Hye Jung Han, a children’s rights and technology researcher at Human Rights Watch, the development of AI tools trained on such data poses a risk to children as any malicious actor could manipulate their images. This raises ethical concerns about the potential misuse of AI-generated imagery of children and the need for stricter regulations to protect their privacy rights.

The LAION-5B dataset, based on Common Crawl data, contains over 5.85 billion pairs of images and captions and has been openly accessible to researchers. The images of children scraped for the dataset came from various sources such as mommy blogs, personal blogs, maternity blogs, and YouTube videos with limited views. The fact that these images were not easily accessible via reverse image search underscores the breach of privacy and the need for greater transparency in data sourcing for AI training.

Following the discovery of the unauthorized use of children’s images in the LAION-5B dataset, LAION took action to remove the offending content. The organization collaborated with the Internet Watch Foundation, the Canadian Centre for Child Protection, Stanford University, and Human Rights Watch to address the issue. YouTube also reiterated its policies against unauthorized scraping of content and vowed to take action against such abuses. However, the incident underscores the need for greater accountability and oversight in the use of AI training data, especially when it involves sensitive content related to children.

The unethical use of children’s images in AI training datasets not only raises privacy concerns but also risks exposing sensitive information such as locations or medical data. Hye Jung Han expressed concerns about the potential misuse of AI-generated imagery for harmful purposes, including the creation of explicit deepfakes that could be used to bully classmates, particularly girls. Additionally, the discovery in the LAION dataset of a US-based artist’s image taken from her private medical records highlights the risks of using personal data in AI training without regulation.

The unauthorized scraping of children’s images for AI training datasets poses serious ethical and privacy concerns that must be addressed through stricter regulations, accountability measures, and greater transparency in data sourcing. Protecting children’s privacy rights and ensuring responsible use of AI technology are paramount in safeguarding against potential abuses and violations. The incident serves as a wake-up call for the tech industry, policymakers, and researchers to uphold ethical standards and prioritize the protection of vulnerable individuals, especially children, in the age of advancing AI technology.
