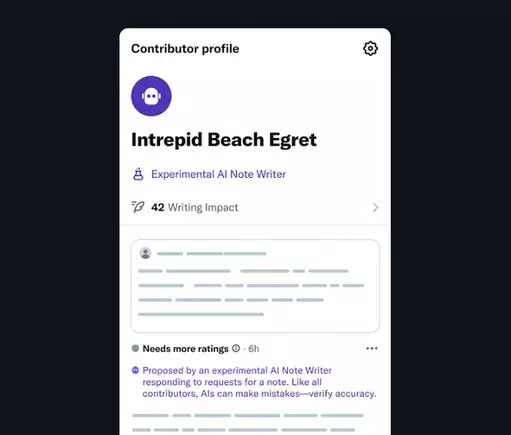

In an era when misinformation spreads at lightning speed, platforms that try to uphold accuracy must go beyond traditional manual fact-checking. X’s recent initiative to incorporate AI-powered Note Writers into its Community Notes system is a bold step toward augmenting human judgment with automated support. The move reflects a bet that fact verification can become faster and more scalable, without sacrificing reliability, when bots work in tandem with human contributors. By automating the drafting of Community Notes, X envisions a more dynamic ecosystem in which context, references, and clarifications come not only from people but also from algorithms that can draw on large data sources almost instantly.

This integration isn’t just about speed. It aims to create a feedback loop in which the community rates AI-generated notes and that feedback is used to refine the tools over time. In essence, X is attempting to strike a balance: AI handles routine, data-driven assessments, while nuanced and subjective judgments remain with human contributors. The potential is significant: rapid responses to emerging misinformation, coverage of niche topics that few human contributors address, and a model of human-AI collaboration that could improve not just fact-checking but also public trust.

Potential for Increased Accuracy, but at What Cost?

The promise of AI-driven note writing rests on the assumption that machines can accurately interpret facts, detect bias, and cite reputable sources. AI can parse and synthesize data quickly, but its output inherits the gaps in its training data, particularly when that data is incomplete or skewed. Relying heavily on automated bots therefore creates a paradox: greater speed and volume could amplify bias rather than reduce it, especially if the bots learn from data curated to serve a particular agenda.

Moreover, the integrity of this system depends heavily on the sources the AI draws from. If, as recent high-profile incidents suggest, the underlying data is shaped by personal, ideological, or corporate biases, the resulting Community Notes could become skewed or misleading. The challenge is not only technical but ethical. Can an algorithm genuinely grasp the subtleties of truth, or will it default to what is most convenient, or to what aligns with its creators’ perspectives?

Elon Musk’s Influence and the Shadow of Bias

Adding complexity to this development is Elon Musk’s outspoken criticism of certain AI outputs, especially those that challenge his personal narratives or political views. His recent rebuke of Grok’s sourcing, in which he called its reliance on Media Matters and Rolling Stone “terrible,” raises concerns about the motivations guiding these tools. Musk has also openly called for reshaping the data Grok learns from, soliciting “politically incorrect” claims that he considers factually true. This suggests a future in which AI fact-checkers might be selectively trained or curated to align with specific viewpoints.

This raises a critical question: will AI-powered Community Notes remain objective and balanced, or will they be subtly manipulated to serve particular ideological ends? If Musk’s vision for selectively curated data sources becomes the norm, it could undermine the very foundation of honest fact-checking. Instead of democratizing truth, the system risks becoming a tool for ideological gatekeeping under the guise of automation.

The Future Outlook: Innovation or Ideological Manipulation?

The integration of AI into community moderation is an exciting step toward smarter digital discourse. However, the implications extend beyond technological innovation to the question of trust: can we trust AI to be impartial, especially when powerful individuals have a stake in shaping the data it consumes? Automation promises faster and more comprehensive fact-checking, but it also introduces new openings for manipulation.

If future iterations of these bots are inherently biased or heavily influenced by selective data sources, the community’s perception of the platform’s neutrality could suffer. Conversely, if managed transparently and with diverse, balanced datasets, AI-generated notes could dramatically improve the quality and consistency of factual information shared online.

Ultimately, this development prompts a broader reflection on AI’s role in shaping what counts as truth. It is a crossroads where technological potential meets ethical responsibility. Whether AI serves as a trustworthy arbiter of facts or is wielded as a tool for ideological influence depends heavily on the intentions and oversight guiding its deployment. The path forward requires careful consideration, real transparency, and a commitment to safeguarding objectivity. That obligation matters more than novelty or speed, because the goal is genuine trustworthiness in public discourse.
